Configuring Hive on MR3 to access secure (Kerberized) HDFS requires the user to update several files:

- kubernetes/env.sh
- kubernetes/conf/core-site.xml
- kubernetes/conf/yarn-site.xml
- kubernetes/conf/hive-site.xml (for hive.mr3.dag.additional.credentials.source)

kubernetes/env.sh

In kubernetes/env.sh, the user specifies how to renew HDFS tokens:

$ vi kubernetes/env.sh

USER_PRINCIPAL=hive@RED

USER_KEYTAB=$KEYTAB_MOUNT_DIR/hive.keytab

KEYTAB_MOUNT_FILE=hive.keytab

TOKEN_RENEWAL_HDFS_ENABLED=true

- USER_PRINCIPAL specifies the principal name to use when renewing HDFS and Hive tokens in DAGAppMaster and ContainerWorkers (for mr3.principal in mr3-site.xml).
- USER_KEYTAB and KEYTAB_MOUNT_FILE specify the name of the keytab file, which the user should copy to the directory kubernetes/key. Note that we may reuse the service keytab file for connecting to Metastore or HiveServer2.
- TOKEN_RENEWAL_HDFS_ENABLED should be set to true in order to automatically renew HDFS tokens.

kubernetes/conf/core-site.xml

In kubernetes/conf/core-site.xml, the user specifies the Kerberos principal name for the HDFS NameNode and the address of the Hadoop key management server (KMS):

$ vi kubernetes/conf/core-site.xml

<property>

<name>fs.defaultFS</name>

<value>file:///</value>

</property>

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

</property>

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>hdfs/red0@RED</value>

</property>

<property>

<name>dfs.encryption.key.provider.uri</name>

<value>kms://http@red0:9292/kms</value>

</property>

- It is important that fs.defaultFS be set to file:///, not an HDFS address like hdfs://red0:8020. This is because from the viewpoint of HiveServer2 running in a Kubernetes cluster, the default file system is the local file system. In fact, HiveServer2 is not even aware that it is reading from a remote HDFS.
- If the configuration key dfs.namenode.kerberos.principal is not specified, Metastore may generate java.lang.IllegalArgumentException, as in:

  org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Got exception: java.io.IOException DestHost:destPort blue0:8020 , LocalHost:localPort hivemr3-metastore-0.metastore.hivemr3.svc.cluster.local/10.44.0.1:0. Failed on local exception: java.io.IOException: Couldn't set up IO streams: java.lang.IllegalArgumentException: Failed to specify server's Kerberos principal name)
- Set the configuration key dfs.encryption.key.provider.uri if encryption is used in HDFS and Hive on MR3 needs to obtain credentials for accessing HDFS.
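The KMS address can also be supplied under the key hadoop.security.key.provider.path, which this page treats as an alternative to dfs.encryption.key.provider.uri. The fragment below is a minimal sketch that reuses the KMS address from the example above; which of the two keys is appropriate depends on the Hadoop version in use, which is an assumption here.

```xml
<!-- kubernetes/conf/core-site.xml: alternative key for the KMS address (sketch) -->
<property>
  <name>hadoop.security.key.provider.path</name>
  <!-- same KMS address as in the example above -->
  <value>kms://http@red0:9292/kms</value>
</property>
```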

kubernetes/conf/yarn-site.xml

In kubernetes/conf/yarn-site.xml, the user specifies the principal name of the Yarn ResourceManager:

$ vi kubernetes/conf/yarn-site.xml

<property>

<name>yarn.resourcemanager.principal</name>

<value>rm/red0@RED</value>

</property>

kubernetes/conf/hive-site.xml

When using Kerberized HDFS, the configuration key hive.mr3.dag.additional.credentials.source in hive-site.xml should be set to a path on HDFS. Usually it suffices to use the HDFS directory storing the warehouse, e.g.:

$ vi kubernetes/conf/hive-site.xml

<property>

<name>hive.mr3.dag.additional.credentials.source</name>

<value>hdfs://hdfs.server:8020/hive/warehouse/</value>

</property>

If hive.mr3.dag.additional.credentials.source is not set, executing a query with no input files (e.g., creating a fresh table or inserting values into an existing table) generates no HDFS tokens and may fail with:

org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS].

Accessing non-secure HDFS

Since it is agnostic to the type of data sources, Hive on MR3 can access multiple data sources simultaneously (e.g., by joining tables from two separate Hadoop clusters). The only restriction is that a common KDC and KMS must be used.

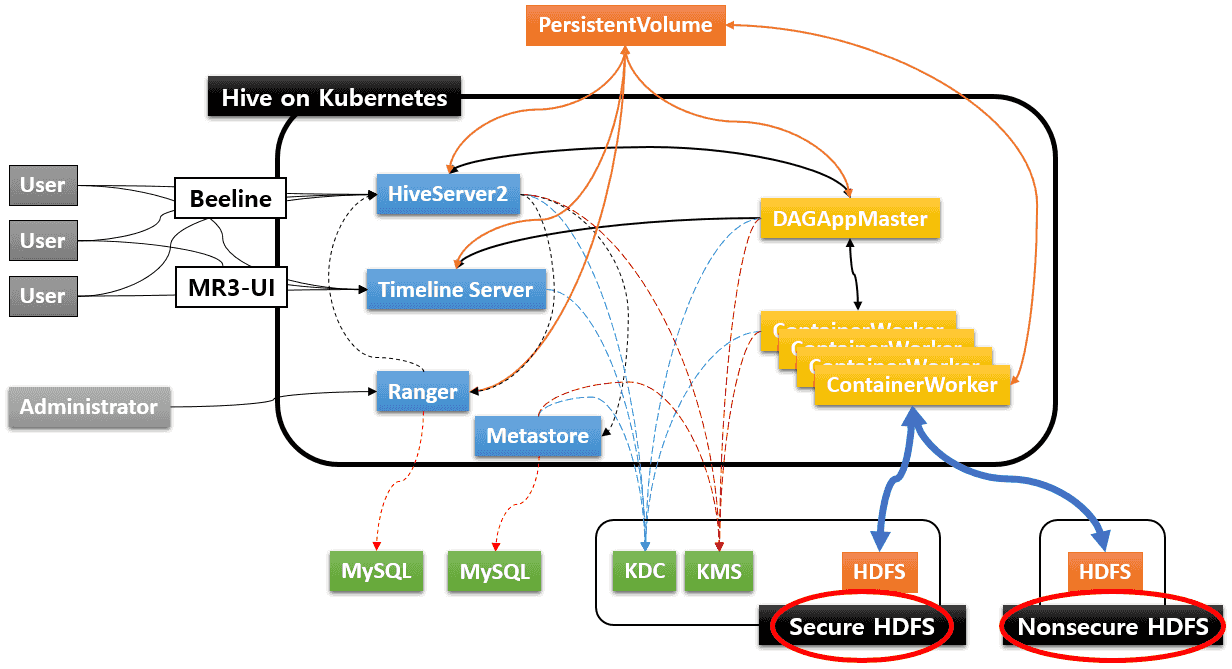

Below we illustrate how to use a nonsecure HDFS as another remote data source in addition to an existing secure HDFS, as depicted in the following diagram.

We assume that the secure HDFS runs on red0 and the nonsecure HDFS runs on gold0.

If a nonsecure HDFS is the only data source while Kerberos-based authentication is used, the configuration key dfs.encryption.key.provider.uri (or hadoop.security.key.provider.path) should not be set in core-site.xml.

Because of bugs in Hive and its dependency on Hadoop libraries, however, accessing tables in Text and Parquet formats may still generate errors after failing to obtain credentials (e.g., in FileInputFormat.listStatus()). In such a case, try to create tables in ORC format.

0: jdbc:hive2://blue0:9852/> create table if not exists foo as SELECT 'foo' as foo;

0: jdbc:hive2://blue0:9852/> select * from foo;

...

Error: java.io.IOException: java.net.ConnectException: Call From null to blue0:9292 failed on connection exception: java.net.ConnectException: Error while authenticating with endpoint: http://blue0:9292/kms/v1/?op=GETDELEGATIONTOKEN&renewer=hive%2Fhiveserver2-internal.hivemr3.svc.cluster.local%40PL; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused (state=,code=0)

0: jdbc:hive2://blue0:9852/> drop table foo;

0: jdbc:hive2://blue0:9852/> create table if not exists foo stored as parquet as SELECT 'foo' as foo;

0: jdbc:hive2://blue0:9852/> select * from foo;

...

Error: java.io.IOException: java.net.ConnectException: Call From null to blue0:9292 failed on connection exception: java.net.ConnectException: Error while authenticating with endpoint: http://blue0:9292/kms/v1/?op=GETDELEGATIONTOKEN&renewer=hive%2Fhiveserver2-internal.hivemr3.svc.cluster.local%40PL; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused (state=,code=0)

0: jdbc:hive2://blue0:9852/> drop table foo;

0: jdbc:hive2://blue0:9852/> create table if not exists foo stored as orc as SELECT 'foo' as foo;

0: jdbc:hive2://blue0:9852/> select * from foo;

...

+----------+

| foo |

+----------+

kubernetes/conf/core-site.xml

As the first step, we allow Hive to read from secure HDFS and nonsecure HDFS by setting the configuration key ipc.client.fallback-to-simple-auth-allowed in kubernetes/conf/core-site.xml.

$ vi kubernetes/conf/core-site.xml

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

</property>

<property>

<name>ipc.client.fallback-to-simple-auth-allowed</name>

<value>true</value>

</property>

Impersonation issue

Usually it is impersonation issues that prevent access to nonsecure HDFS.

Assuming that HIVE_SERVER2_KERBEROS_PRINCIPAL is set to hive/indigo20@RED in kubernetes/env.sh, creating an external table from nonsecure HDFS may generate an error message shown below.

2019-07-23 14:33:49,950 INFO ipc.Server (Server.java:authorizeConnection(2235)) - Connection from 10.1.91.38:57090 for protocol org.apache.hadoop.hdfs.protocol.ClientProtocol is unauthorized for user gitlab-runner (auth:PROXY) via hive/indigo20@RED (auth:SIMPLE)

2019-07-23 14:33:49,951 INFO ipc.Server (Server.java:doRead(1006)) - Socket Reader #1 for port 8020: readAndProcess from client 10.1.91.38 threw exception [org.apache.hadoop.security.authorize.AuthorizationException: User: hive/indigo20@RED is not allowed to impersonate gitlab-runner]

Here an ordinary user gitlab-runner runs Beeline and tries to create an external table from a directory on HDFS running on gold0.

As indicated by the error message, NameNode on gold0 should allow hive/indigo20@RED to impersonate gitlab-runner.

This requires two changes in core-site.xml on gold0:

- The configuration key hadoop.proxyuser.hive.users should be set to * or gitlab-runner so that hive can impersonate gitlab-runner on gold0 (where the nonsecure HDFS runs).
- The configuration key hadoop.security.auth_to_local should be set so that user hive/indigo20@RED can be mapped to user hive in auth_to_local rules, as shown in the following example.

RULE:[2:$1@$0](hive@RED)s/.*/hive/

RULE:[1:$1@$0](hive@RED)s/.*/hive/

DEFAULT
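As a rough sketch of the two changes above, the relevant part of core-site.xml on gold0 might look as follows. The value gitlab-runner and the rules come from the example above. Everything else about the file is an assumption that depends on the installation, including any existing proxyuser settings such as hadoop.proxyuser.hive.hosts, which Hadoop also consults when authorizing impersonation.

```xml
<!-- core-site.xml on gold0 (nonsecure HDFS); a sketch, not a complete file -->
<property>
  <name>hadoop.proxyuser.hive.users</name>
  <!-- "*" would instead allow hive to impersonate any user -->
  <value>gitlab-runner</value>
</property>

<property>
  <name>hadoop.security.auth_to_local</name>
  <!-- map hive/<host>@RED and hive@RED to the short name hive -->
  <value>
    RULE:[2:$1@$0](hive@RED)s/.*/hive/
    RULE:[1:$1@$0](hive@RED)s/.*/hive/
    DEFAULT
  </value>
</property>
```

With these rules, the two-component principal hive/indigo20@RED is first rewritten to hive@RED by the format [2:$1@$0], then matches the filter (hive@RED), and is finally replaced with the short name hive by the substitution s/.*/hive/.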

Then the user (or the administrator user of gold0) should restart NameNode, and the impersonation issue should disappear.

Note that the impersonation issue arises because of accessing nonsecure HDFS and has nothing to do with the value of the configuration key hive.server2.enable.doAs in kubernetes/conf/hive-site.xml.

That is, even if hive.server2.enable.doAs is set to false, the user may still see the impersonation issue.

Now the user with proper permission can create an external table from the nonsecure HDFS.

0: jdbc:hive2://10.1.91.41:9852/> create external table call_center_gold(

. . . . . . . . . . . . . . . . > cc_call_center_sk bigint

...

. . . . . . . . . . . . . . . . > , cc_tax_percentage double

. . . . . . . . . . . . . . . . > )

. . . . . . . . . . . . . . . . > stored as orc

. . . . . . . . . . . . . . . . > location 'hdfs://gold0:8020/tmp/hivemr3/warehouse/tpcds_bin_partitioned_orc_2.db/call_center';

...

INFO : OK

No rows affected (0.256 seconds)