Hive on MR3 needs a PersistentVolume for several purposes:

- HiveServer2 distributes resources for user-defined functions (UDFs) using the PersistentVolume.

- MR3 DAGAppMaster uses the PersistentVolume for staging directories where DAG recovery files (dag.submitted, dag.summary, dag.reported) are kept.

- MR3 ContainerWorkers use the PersistentVolume to send the results of queries back to HiveServer2.

On Amazon EKS, we usually create PersistentVolumes using EFS. See Using S3 instead of EFS to learn how to use S3 instead of PersistentVolumes.

There are two methods to create a PersistentVolume using EFS:

- With the Amazon EFS CSI driver

- With an external storage provisioner

The first method works only with kubectl version 1.14 or later.

For running Hive on MR3, the second method is slightly simpler. The quick start guide On EKS with Autoscaling uses the second method.

1. With the Amazon EFS CSI driver

Deploy the Amazon EFS CSI driver to the EKS cluster. For instructions, see the AWS User Guide.

Obtain the CIDR range of the VPC of the EKS cluster.

$ aws eks describe-cluster --name hive-mr3 --query "cluster.resourcesVpcConfig.vpcId" --output text

vpc-0286f0b6b501ada72

$ aws ec2 describe-vpcs --vpc-ids vpc-0286f0b6b501ada72 --query "Vpcs[].CidrBlock" --output text

192.168.0.0/16
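The two lookups above can be chained so that the CIDR range can be captured in a shell variable for later steps. The following is a minimal sketch. It assumes a configured AWS CLI and the cluster name hive-mr3 from the example. The function name vpc_cidr_for_cluster is hypothetical.

```shell
# Hypothetical helper: print the CIDR block of the VPC used by an EKS cluster.
# Assumes the AWS CLI is installed and configured for the cluster's region.
vpc_cidr_for_cluster() {
  local cluster="$1" vpc_id
  # Same query as the first command above: the VPC ID of the cluster.
  vpc_id=$(aws eks describe-cluster --name "$cluster" \
    --query "cluster.resourcesVpcConfig.vpcId" --output text) || return 1
  # Same query as the second command above: the CIDR block of that VPC.
  aws ec2 describe-vpcs --vpc-ids "$vpc_id" \
    --query "Vpcs[].CidrBlock" --output text
}

# Usage:
#   CIDR=$(vpc_cidr_for_cluster hive-mr3)
#   echo "$CIDR"
```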

Open the AWS console and create a new security group that allows inbound NFS traffic for EFS mount points. The security group should use the VPC of the EKS cluster and include an inbound rule 1) of type NFS (TCP port 2049) and 2) whose source is the CIDR range obtained in the previous step.
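The same security group can also be created from the command line. The following sketch takes the VPC ID and CIDR range obtained above as arguments. It opens TCP port 2049, which is the port behind the NFS rule type. The group name hive-mr3-efs-nfs and the function name are hypothetical.

```shell
# Hypothetical helper: create a security group in the cluster's VPC that
# accepts NFS (TCP 2049) from the given CIDR range, and print its ID.
create_nfs_security_group() {
  local vpc_id="$1" cidr="$2" sg_id
  sg_id=$(aws ec2 create-security-group \
    --group-name hive-mr3-efs-nfs \
    --description "NFS access to EFS mount targets" \
    --vpc-id "$vpc_id" \
    --query GroupId --output text) || return 1
  # Inbound rule of type NFS whose source is the VPC CIDR range.
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" --protocol tcp --port 2049 --cidr "$cidr" >/dev/null || return 1
  echo "$sg_id"
}

# Usage:
#   SG_ID=$(create_nfs_security_group vpc-0286f0b6b501ada72 192.168.0.0/16)
```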

Create an EFS file system on the AWS console. When creating it, choose the VPC of the EKS cluster and use the security group created in the previous step for every mount target. Change the permissions of the file system if necessary.
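For reference, this step can also be done from the command line. The following sketch takes the ID of the security group created in the previous step, followed by one subnet ID per Availability Zone chosen by the user. EFS allows at most one mount target per Availability Zone. Mount targets can only be created after the file system becomes available, so the sketch polls its lifecycle state first. The creation token hive-mr3-efs and the function name are hypothetical.

```shell
# Hypothetical helper: create an EFS file system, wait until it is available,
# then create one mount target per subnet using the given security group.
# Usage: create_efs SG_ID SUBNET_ID [SUBNET_ID ...]   (one subnet per AZ)
create_efs() {
  local sg_id="$1" fs_id state subnet
  shift
  fs_id=$(aws efs create-file-system --creation-token hive-mr3-efs \
    --query FileSystemId --output text) || return 1
  # Poll the lifecycle state; mount targets require an 'available' file system.
  while :; do
    state=$(aws efs describe-file-systems --file-system-id "$fs_id" \
      --query "FileSystems[0].LifeCycleState" --output text) || return 1
    [ "$state" = "available" ] && break
    sleep 5
  done
  for subnet in "$@"; do
    aws efs create-mount-target --file-system-id "$fs_id" \
      --subnet-id "$subnet" --security-groups "$sg_id" >/dev/null || return 1
  done
  echo "$fs_id"
}
```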

Retrieve your Amazon EFS file system ID.

$ aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text

fs-21726c00

Set the file system ID as the value of volumeHandle in kubernetes/efs-csi/workdir-pv.yaml.

$ vi kubernetes/efs-csi/workdir-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: workdir-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-21726c00
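Instead of editing the file by hand, the user can substitute the ID with sed. The following sketch assumes GNU sed, because it uses -i without a backup suffix. It also assumes the file has a single volumeHandle line, as in the manifest above. The function name is hypothetical.

```shell
# Hypothetical helper: replace the EFS file system ID in a PersistentVolume
# manifest, keeping the original indentation of the volumeHandle line.
# Assumes GNU sed (-i without a backup suffix).
set_volume_handle() {
  local file="$1" fs_id="$2"
  sed -i "s/^\([[:space:]]*volumeHandle:\).*/\1 ${fs_id}/" "$file"
}

# Usage (assumes only one EFS file system is returned):
#   FS_ID=$(aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text)
#   set_volume_handle kubernetes/efs-csi/workdir-pv.yaml "$FS_ID"
```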

Create a new StorageClass efs-sc.

$ kubectl create -f kubernetes/efs-csi/sc.yaml

storageclass.storage.k8s.io/efs-sc created
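The contents of kubernetes/efs-csi/sc.yaml are not reproduced here. For static provisioning with the EFS CSI driver, a StorageClass only needs a name and the provisioner efs.csi.aws.com. A minimal equivalent might therefore look like the following sketch, which writes the manifest with a here-document. This is an assumption, not the file shipped with MR3, and the function name is hypothetical.

```shell
# Hypothetical: write a minimal StorageClass for the EFS CSI driver.
# The name efs-sc must match storageClassName in workdir-pv.yaml.
write_efs_storage_class() {
  cat > "$1" <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
EOF
}

# Usage:
#   write_efs_storage_class /tmp/sc.yaml
#   kubectl create -f /tmp/sc.yaml
```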

Create the PersistentVolume workdir-pv and a new PersistentVolumeClaim workdir-pvc.

EFS does not limit the capacity of the PersistentVolumeClaim workdir-pvc, so the user can ignore its nominal capacity 1Mi.

All Pods of Hive on MR3 share the PersistentVolumeClaim workdir-pvc.

$ kubectl create -f kubernetes/efs-csi/workdir-pv.yaml

persistentvolume/workdir-pv created

$ kubectl create -f kubernetes/efs-csi/workdir-pvc.yaml

persistentvolumeclaim/workdir-pvc created

$ kubectl get pv -n hivemr3

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

workdir-pv 5Gi RWX Retain Bound hivemr3/workdir-pvc efs-sc 4m8s

$ kubectl get pvc -n hivemr3

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

workdir-pvc Bound workdir-pv 5Gi RWX efs-sc 3m25s
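Binding can take a moment. Instead of re-running kubectl get pvc, the user can poll the phase of the claim with kubectl's jsonpath output. The following is a sketch. The function name, the default of 30 attempts, and the 2-second interval are arbitrary choices.

```shell
# Hypothetical helper: wait until a PersistentVolumeClaim reports phase Bound.
# Usage: wait_for_pvc_bound NAMESPACE NAME [TRIES]
wait_for_pvc_bound() {
  local ns="$1" name="$2" tries="${3:-30}" phase="" i=0
  while [ "$i" -lt "$tries" ]; do
    phase=$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$name is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "$name is still ${phase:-not found}" >&2
  return 1
}

# Usage:
#   wait_for_pvc_bound hivemr3 workdir-pvc
```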

2. With an external storage provisioner

When using an external storage provisioner, the user should take the following steps: 1) create EFS for the EKS cluster; 2) if necessary, configure security groups so that the EKS cluster can access EFS; 3) create a StorageClass for EFS.

1) Creating EFS

The user can create EFS on the AWS Console. When creating EFS, choose the VPC of the EKS cluster. Make sure that a mount target is created for each Availability Zone. If the user can choose the security group for mount targets, use the security group for the EKS cluster.

For creating EFS using AWS CLI, see the quick start guide On EKS with Autoscaling.

2) Configuring security groups when necessary

If the user cannot choose the security group for mount targets, security groups should be configured manually. Identify two security groups: 1) the security group for the EC2 instances constituting the EKS cluster; 2) the security group associated with the EFS mount targets.

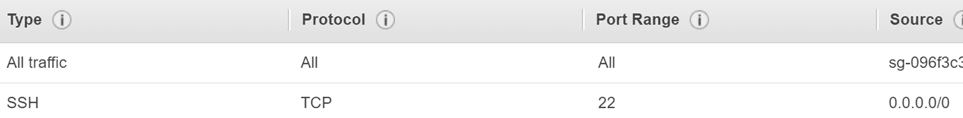

For the first security group (for the EC2 instances), add a rule to allow inbound access using Secure Shell (SSH) from any host so that EFS can access the EKS cluster, as shown below:

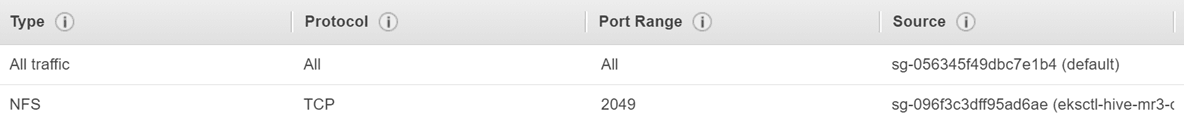

For the second security group, add a rule to allow inbound access using NFS from the first security group so that the EKS cluster can access EFS.

In this example, the first security group is sg-096f3c3dff95ad6ae, so it is used as the source of the rule.
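The NFS rule for the second security group can also be added with the AWS CLI. In that case, the first security group is used as the source instead of a CIDR range. The following is a sketch. The user must look up the ID of the security group associated with the EFS mount targets, and the function name is hypothetical.

```shell
# Hypothetical helper: allow NFS (TCP 2049) into the EFS mount-target security
# group from the security group of the EKS worker nodes.
# Usage: allow_nfs_from_nodes EFS_SG_ID NODE_SG_ID
allow_nfs_from_nodes() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" --protocol tcp --port 2049 --source-group "$2"
}

# Usage (placeholder for the mount-target group; node group from the example):
#   allow_nfs_from_nodes sg-<efs-mount-target-group> sg-096f3c3dff95ad6ae
```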

To check whether the EKS cluster can mount EFS to a local directory on its EC2 instances, get the file system ID of EFS (e.g., fs-0440de65) and the region ID (e.g., ap-northeast-2). Then log on to an EC2 instance and execute the following commands.

$ mkdir -p /home/ec2-user/efs

$ sudo mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport fs-0440de65.efs.ap-northeast-2.amazonaws.com:/ /home/ec2-user/efs

If the security groups are properly configured, EFS is mounted to the directory /home/ec2-user/efs.
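The NFS server address in the mount command has the form <file-system-id>.efs.<region>.amazonaws.com. The following sketch builds that address, mounts EFS with the same NFS options, and then checks /proc/mounts to confirm the result. The function names are hypothetical, and the check assumes a Linux host.

```shell
# Hypothetical helpers for the mount test above (Linux only).
efs_dns_name() {
  # $1 = file system ID, $2 = region ID
  echo "$1.efs.$2.amazonaws.com"
}

is_mounted() {
  # Succeeds if the given directory appears as a mount point in /proc/mounts.
  awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' /proc/mounts
}

mount_efs() {
  # $1 = file system ID, $2 = region ID, $3 = local directory
  mkdir -p "$3"
  sudo mount -t nfs \
    -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport \
    "$(efs_dns_name "$1" "$2"):/" "$3" && is_mounted "$3"
}

# Usage:
#   mount_efs fs-0440de65 ap-northeast-2 /home/ec2-user/efs && echo "EFS mounted"
```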

3) Creating a StorageClass

We use the external storage provisioner for AWS EFS (available at https://github.com/kubernetes-incubator/external-storage) to create a StorageClass for EFS. The provisioner serves PersistentVolumeClaims that request the StorageClass for EFS by dynamically creating PersistentVolumes on EFS.

To create a StorageClass for EFS, use the four YAML files in the directory kubernetes/efs. They start the storage provisioner for EFS and create a PersistentVolumeClaim workdir-pvc.

Set the file system ID of EFS, the region ID, and the NFS server address in kubernetes/efs/manifest.yaml.

$ vi kubernetes/efs/manifest.yaml

data:
  file.system.id: fs-0440de65
  aws.region: ap-northeast-2
  provisioner.name: example.com/aws-efs
  dns.name: ""

spec:
  template:
    spec:
      volumes:
        - name: pv-volume
          nfs:
            server: fs-0440de65.efs.ap-northeast-2.amazonaws.com
            path: /
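The three values can also be set with sed. The following sketch rewrites file.system.id, aws.region, and the NFS server address together so that they stay consistent. It assumes GNU sed and the key names shown in the manifest above. The function name is hypothetical.

```shell
# Hypothetical helper: set the file system ID, region, and NFS server address
# in kubernetes/efs/manifest.yaml (GNU sed; indentation is preserved).
set_efs_manifest() {
  local file="$1" fs_id="$2" region="$3"
  local server="${fs_id}.efs.${region}.amazonaws.com"
  sed -i \
    -e "s/^\([[:space:]]*file\.system\.id:\).*/\1 ${fs_id}/" \
    -e "s/^\([[:space:]]*aws\.region:\).*/\1 ${region}/" \
    -e "s/^\([[:space:]]*server:\).*/\1 ${server}/" \
    "$file"
}

# Usage:
#   set_efs_manifest kubernetes/efs/manifest.yaml fs-0440de65 ap-northeast-2
```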

Then execute the script kubernetes/mount-efs.sh, which uses the namespace specified by the environment variable MR3_NAMESPACE in kubernetes/env.sh.

$ kubernetes/mount-efs.sh

The user can find a new StorageClass aws-efs, a new Pod (e.g., efs-provisioner-785b649d46-pt8rh) running the storage provisioner, and a new PersistentVolumeClaim workdir-pvc.

EFS does not limit the capacity of the PersistentVolumeClaim workdir-pvc, so the user can ignore its nominal capacity 1Mi.

All Pods of Hive on MR3 will share the PersistentVolumeClaim workdir-pvc.

$ kubectl get sc

NAME PROVISIONER AGE

aws-efs example.com/aws-efs 90s

gp2 (default) kubernetes.io/aws-ebs 48m

$ kubectl get pods -n hivemr3

NAME READY STATUS RESTARTS AGE

efs-provisioner-785b649d46-pt8rh 1/1 Running 0 65s

$ kubectl get pvc -n hivemr3

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

workdir-pvc Bound pvc-4cf7cdeb-c41f-11e9-8776-0a0b4be6c9fa 1Mi RWX aws-efs 112s

The user can verify that a new directory for the PersistentVolumeClaim workdir-pvc has been created on EFS.

$ kubectl exec -n hivemr3 -it efs-provisioner-785b649d46-pt8rh -- apk add bash

$ kubectl exec -n hivemr3 -it efs-provisioner-785b649d46-pt8rh -- /bin/bash

$ ls /persistentvolumes # inside the storage provisioner Pod

/persistentvolumes/workdir-pvc-pvc-4cf7cdeb-c41f-11e9-8776-0a0b4be6c9fa

Copying a MySQL connector jar file to EFS

Assume that the Docker image does not contain a MySQL connector jar file and that Metastore does not automatically download such a jar file. In that case, the user should manually copy a MySQL connector jar file to a subdirectory /lib of the directory for the PersistentVolumeClaim workdir-pvc on EFS so that a MySQL connector becomes available to every Pod.

Log on to an EC2 instance and mount EFS to a local directory.

$ mkdir -p /home/ec2-user/efs

$ sudo mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport fs-0440de65.efs.ap-northeast-2.amazonaws.com:/ /home/ec2-user/efs

Then find the directory for the PersistentVolumeClaim workdir-pvc and create a subdirectory /lib.

$ ls /home/ec2-user/efs/

workdir-pvc-pvc-4cf7cdeb-c41f-11e9-8776-0a0b4be6c9fa

$ sudo mkdir -p /home/ec2-user/efs/workdir-pvc-pvc-4cf7cdeb-c41f-11e9-8776-0a0b4be6c9fa/lib

$ cd /home/ec2-user/efs/workdir-pvc-pvc-4cf7cdeb-c41f-11e9-8776-0a0b4be6c9fa/lib

$ sudo chmod 777 .
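The directory name includes the volume name generated by the provisioner, so it differs from cluster to cluster. The following sketch locates the directory by its workdir-pvc-pvc- prefix, which matches the listing above, instead of requiring the user to copy the name by hand. It assumes exactly one matching directory. The function name is hypothetical.

```shell
# Hypothetical helper: print the directory for workdir-pvc under an EFS mount
# point. Fails unless exactly one matching directory exists.
# Usage: find_workdir_pvc_dir /home/ec2-user/efs
find_workdir_pvc_dir() {
  local mnt="$1" d dir="" count=0
  for d in "$mnt"/workdir-pvc-pvc-*; do
    [ -d "$d" ] || continue   # the glob stays literal if nothing matches
    dir="$d"
    count=$((count + 1))
  done
  if [ "$count" -ne 1 ]; then
    echo "expected one workdir-pvc directory under $mnt, found $count" >&2
    return 1
  fi
  echo "$dir"
}

# Usage (same steps as above):
#   DIR=$(find_workdir_pvc_dir /home/ec2-user/efs) &&
#     sudo mkdir -p "$DIR/lib" && sudo chmod 777 "$DIR/lib"
```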

Alternatively, the user can connect directly to the storage provisioner Pod and create a subdirectory /lib under the directory for the PersistentVolumeClaim workdir-pvc.

After creating the subdirectory /lib, copy the jar file into it (e.g., by downloading it from the internet).

$ wget http://your.server.address/mysql-connector-java-8.0.12.jar

$ ls

mysql-connector-java-8.0.12.jar
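If the jar file is fetched from Maven Central rather than from a private server, its URL follows the standard Maven repository layout: group path, artifact, version, then artifact-version.jar. The following sketch builds such a URL. The function name is hypothetical. The user should confirm that the chosen version exists and is compatible with the MySQL server in use.

```shell
# Hypothetical helper: build a Maven Central download URL for a jar.
# Usage: maven_central_url GROUP_ID ARTIFACT_ID VERSION
maven_central_url() {
  local group_path
  group_path=$(printf '%s' "$1" | tr '.' '/')   # e.g. com.mysql -> com/mysql
  echo "https://repo1.maven.org/maven2/${group_path}/$2/$3/$2-$3.jar"
}

# Usage, run inside the lib directory:
#   wget "$(maven_central_url mysql mysql-connector-java 8.0.12)"
```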

In order for Metastore to use the MySQL connector provided by the user, the Metastore Pod should use the PersistentVolumeClaim workdir-pvc.

Edit kubernetes/yaml/metastore.yaml and uncomment the following block:

$ vi kubernetes/yaml/metastore.yaml

spec:
  template:
    spec:
      containers:
        volumeMounts:
        - name: work-dir-volume
          mountPath: /opt/mr3-run/lib
          subPath: lib
      volumes:
      - name: work-dir-volume
        persistentVolumeClaim:
          claimName: workdir-pvc
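After editing, the user can quickly confirm that the block is uncommented: the claimName line should appear without a leading #. The following is a sketch with a hypothetical function name. It only inspects the file and does not validate the YAML.

```shell
# Hypothetical helper: succeed if the file has an uncommented
# "claimName: workdir-pvc" line (a commented-out line starts with '#').
uses_workdir_pvc() {
  grep -Eq '^[[:space:]]*claimName:[[:space:]]*workdir-pvc[[:space:]]*$' "$1"
}

# Usage:
#   uses_workdir_pvc kubernetes/yaml/metastore.yaml && echo "workdir-pvc is mounted"
```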