The user can start Metastore by executing the script kubernetes/run-metastore.sh as explained in Running Metastore.

The user can also start HiveServer2 by executing the script kubernetes/run-hive.sh as explained in Running HiveServer2.

After running a few queries, the user also finds DAGAppMaster and ContainerWorker Pods (mr3master-9802-0 and mr3worker-392c-2 in the example below).

$ kubectl get pods -n hivemr3
NAME                               READY   STATUS    RESTARTS   AGE
efs-provisioner-6947dc8bcc-9f4hq   1/1     Running   0          3h59m
hivemr3-hiveserver2-lmpv7          1/1     Running   0          159m
hivemr3-metastore-0                1/1     Running   0          3h25m
mr3master-9802-0                   1/1     Running   0          158m
mr3worker-392c-2                   1/1     Running   0          152m
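
As a quick check, the user can parse the output of kubectl get pods to confirm that every Pod is Running. The following is a minimal sketch that assumes the default column layout shown above. The helper name pods_not_running is hypothetical. In practice the text would come from running the command, not from a string literal, and the sample below marks one ContainerWorker Pod as Pending purely for illustration.

```python
def pods_not_running(kubectl_output: str) -> list[str]:
    """Return the names of Pods whose STATUS column is not 'Running'.

    Assumes the default `kubectl get pods` layout with whitespace-separated
    columns NAME READY STATUS RESTARTS AGE (hypothetical helper).
    """
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    status_idx = lines[0].split().index("STATUS")  # locate the column by header
    not_running = []
    for line in lines[1:]:
        fields = line.split()
        if fields[status_idx] != "Running":
            not_running.append(fields[0])  # NAME is the first column
    return not_running


# Illustrative sample: the Pending status is made up for this example.
sample = """\
NAME                               READY   STATUS    RESTARTS   AGE
hivemr3-metastore-0                1/1     Running   0          3h25m
mr3master-9802-0                   1/1     Running   0          158m
mr3worker-392c-2                   0/1     Pending   0          1m
"""
print(pods_not_running(sample))
```

An empty list means that all listed Pods are Running.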

Below we review additional steps for running Metastore and HiveServer2.

Placing the Metastore Pod

To place the Metastore Pod in the mr3-master node group, the user should add a nodeAffinity rule to the file kubernetes/yaml/metastore.yaml.

$ vi kubernetes/yaml/metastore.yaml

spec:
  template:
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: roles
                operator: In
                values:
                - "masters"

Here we set the key field to roles and the values field to masters because the mr3-master node group is created with the label roles: masters.
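
To see why this rule admits only nodes in the mr3-master node group, the user can emulate the matching logic in a few lines. This is a simplified model, not the Kubernetes scheduler. It models only the In operator. It treats nodeSelectorTerms as ORed and the matchExpressions within a term as ANDed, as the Kubernetes documentation describes. The label value workers in the example is made up.

```python
def expression_matches(labels: dict[str, str], expr: dict) -> bool:
    """Evaluate one matchExpressions entry; only the 'In' operator is modeled."""
    if expr["operator"] != "In":
        raise NotImplementedError("this sketch only models the 'In' operator")
    # A missing label yields None, which is never in the values list.
    return labels.get(expr["key"]) in expr["values"]


def node_satisfies(labels: dict[str, str], node_selector_terms: list[dict]) -> bool:
    """A node qualifies if ANY term matches; a term matches only if ALL of
    its matchExpressions match."""
    return any(
        all(expression_matches(labels, e) for e in term["matchExpressions"])
        for term in node_selector_terms
    )


# The rule from kubernetes/yaml/metastore.yaml, written as Python data.
terms = [{"matchExpressions": [{"key": "roles", "operator": "In", "values": ["masters"]}]}]

print(node_satisfies({"roles": "masters"}, terms))  # node in the mr3-master node group
print(node_satisfies({"roles": "workers"}, terms))  # hypothetical node in another group
print(node_satisfies({}, terms))                    # node without the roles label
```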

For HiveServer2, we do not need such a nodeAffinity rule because the existing podAffinity rule places the HiveServer2 Pod on the same node where the Metastore Pod runs.
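
After both Pods start, the user can confirm the effect of the podAffinity rule by comparing the NODE column of kubectl get pods -n hivemr3 -o wide. The following is a minimal sketch. It assumes that output has already been collected into a Pod-to-node mapping. The function name colocated and the node names node-a and node-b are hypothetical.

```python
def colocated(pod_to_node: dict[str, str], prefix_a: str, prefix_b: str) -> bool:
    """Return True if the first Pods whose names start with the two prefixes
    run on the same node (hypothetical helper)."""
    def node_of(prefix: str) -> str:
        for pod, node in pod_to_node.items():
            if pod.startswith(prefix):
                return node
        raise KeyError(f"no Pod with prefix {prefix!r}")

    return node_of(prefix_a) == node_of(prefix_b)


# Pod names are from the listing above; node names are invented.
pods = {
    "hivemr3-metastore-0": "node-a",
    "hivemr3-hiveserver2-lmpv7": "node-a",
    "mr3worker-392c-2": "node-b",
}
print(colocated(pods, "hivemr3-metastore", "hivemr3-hiveserver2"))
```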

Configuring the LoadBalancer

Executing the script kubernetes/run-hive.sh creates a new LoadBalancer for HiveServer2.

Get the security group associated with the LoadBalancer.

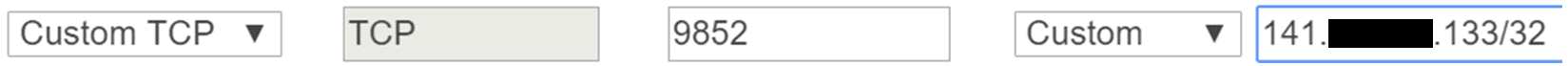

If necessary, edit the inbound rule to restrict the source IP addresses, e.g., by changing the source from 0.0.0.0/0 to (IP address)/32.
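
The difference between the two sources can be checked with Python's standard ipaddress module. 0.0.0.0/0 admits every IPv4 address, while a /32 source admits exactly one. The sketch below uses placeholder addresses from the documentation ranges 203.0.113.0/24 and 198.51.100.0/24, not real client addresses. The helper is_allowed is hypothetical and only models CIDR membership, not the rest of the security group semantics.

```python
import ipaddress


def is_allowed(source_cidr: str, client_ip: str) -> bool:
    """Return True if client_ip falls inside the inbound rule's source CIDR."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(source_cidr)


print(ipaddress.ip_network("0.0.0.0/0").num_addresses)       # all IPv4 addresses
print(ipaddress.ip_network("203.0.113.7/32").num_addresses)  # a single address
print(is_allowed("203.0.113.7/32", "198.51.100.20"))         # another client is rejected
```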

The LoadBalancer closes a Beeline connection that shows no activity for the idle timeout period, which is 60 seconds by default. The user may want to increase the idle timeout, e.g., to 600 seconds. Otherwise Beeline loses its connection to HiveServer2 after even a brief period of inactivity.
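
Besides the EC2 console, the idle timeout can be changed with the AWS CLI. The sketch below builds the arguments for aws elb modify-load-balancer-attributes with a ConnectionSettings.IdleTimeout attribute. It assumes that the LoadBalancer is a Classic Load Balancer, which the host name format *.elb.amazonaws.com suggests but does not prove. Its name is assumed to be the first DNS label minus the trailing -number. The 1 to 4000 second range is the one documented for Classic Load Balancers. The helper names are hypothetical, and the sketch only prints the command without running it.

```python
import json
import shlex


def elb_name_from_dns(dns_name: str) -> str:
    """Assumes the Classic Load Balancer pattern <name>-<number>.<region>.elb.amazonaws.com."""
    first_label = dns_name.split(".", 1)[0]
    return first_label.rsplit("-", 1)[0]


def idle_timeout_command(lb_name: str, seconds: int) -> list[str]:
    """Build `aws elb modify-load-balancer-attributes` arguments setting the idle timeout."""
    if not 1 <= seconds <= 4000:  # documented range for Classic Load Balancers
        raise ValueError("idle timeout must be between 1 and 4000 seconds")
    attributes = json.dumps({"ConnectionSettings": {"IdleTimeout": seconds}})
    return [
        "aws", "elb", "modify-load-balancer-attributes",
        "--load-balancer-name", lb_name,
        "--load-balancer-attributes", attributes,
    ]


dns = "a4e5adbd2c7e011e9a34c0a411ce04be-1099963422.ap-northeast-2.elb.amazonaws.com"
cmd = idle_timeout_command(elb_name_from_dns(dns), 600)
print(shlex.join(cmd))  # paste into a shell configured with AWS credentials
```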

The LoadBalancer periodically sends ping signals to HiveServer2 to check its health.

Because HiveServer2 interprets each ping signal as an invalid request, it may print ERROR messages caused by org.apache.thrift.transport.TSaslTransportException.

2019-08-25T17:52:06,351 ERROR [HiveServer2-Handler-Pool: Thread-29] server.TThreadPoolServer: Error occurred during processing of message.
java.lang.RuntimeException: org.apache.thrift.transport.TSaslTransportException: No data or no sasl data in the stream
...

These messages can be safely ignored. If the user wants to see fewer of them, increase the ping (health check) interval of the LoadBalancer.
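
When scanning the HiveServer2 log, the user may want to separate these health-check entries from genuine errors. The following is a minimal sketch. It assumes that each health-check entry is an ERROR line immediately followed by the TSaslTransportException line shown above. The helpers count_health_check_errors and other_errors are hypothetical, and the second ERROR line in the sample is invented for illustration.

```python
SIGNATURE = "TSaslTransportException: No data or no sasl data in the stream"


def count_health_check_errors(log_text: str) -> int:
    """Count exception lines that match the health-check signature."""
    return sum(1 for line in log_text.splitlines() if SIGNATURE in line)


def other_errors(log_text: str) -> list[str]:
    """Return ERROR lines that are NOT immediately followed by the signature."""
    lines = log_text.splitlines()
    result = []
    for i, line in enumerate(lines):
        if " ERROR " not in line:
            continue
        following = lines[i + 1] if i + 1 < len(lines) else ""
        if SIGNATURE in following:
            continue  # health-check noise from the LoadBalancer
        result.append(line)
    return result


log = """\
2019-08-25T17:52:06,351 ERROR [HiveServer2-Handler-Pool: Thread-29] server.TThreadPoolServer: Error occurred during processing of message.
java.lang.RuntimeException: org.apache.thrift.transport.TSaslTransportException: No data or no sasl data in the stream
2019-08-25T17:53:00,000 ERROR [main] example.Component: hypothetical unrelated failure
"""
print(count_health_check_errors(log))
print(other_errors(log))
```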

Finding the address of HiveServer2

To run Beeline, get the LoadBalancer Ingress of the Service hiveserver2.

$ kubectl describe service -n hivemr3 hiveserver2
Name:                     hiveserver2
...
IP:                       10.100.143.232
External IPs:             15.164.175.87
LoadBalancer Ingress:     a4e5adbd2c7e011e9a34c0a411ce04be-1099963422.ap-northeast-2.elb.amazonaws.com
...
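
To script this step, the user can extract the LoadBalancer Ingress field from the output of kubectl describe. The following is a minimal sketch that assumes the "Key: value" layout shown above. The helper load_balancer_ingress is hypothetical. Alternatively, kubectl get service -n hivemr3 hiveserver2 -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' returns the same host name directly.

```python
def load_balancer_ingress(describe_output: str) -> str:
    """Return the value of the 'LoadBalancer Ingress:' field (hypothetical helper)."""
    for line in describe_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "LoadBalancer Ingress":
            return value.strip()
    raise ValueError("no LoadBalancer Ingress field found")


describe = """\
Name:                     hiveserver2
IP:                       10.100.143.232
LoadBalancer Ingress:     a4e5adbd2c7e011e9a34c0a411ce04be-1099963422.ap-northeast-2.elb.amazonaws.com
"""
print(load_balancer_ingress(describe))
```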

Get the IP address of the LoadBalancer Ingress.

$ nslookup a4e5adbd2c7e011e9a34c0a411ce04be-1099963422.ap-northeast-2.elb.amazonaws.com
...
Non-authoritative answer:
Name:    a4e5adbd2c7e011e9a34c0a411ce04be-1099963422.ap-northeast-2.elb.amazonaws.com
Address: 52.78.11.202
Name:    a4e5adbd2c7e011e9a34c0a411ce04be-1099963422.ap-northeast-2.elb.amazonaws.com
Address: 52.79.86.206
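
The same lookup can be done from Python with socket.getaddrinfo, restricted to IPv4. The following is a minimal sketch. The helper name ipv4_addresses is hypothetical, and the sketch does not resolve the LoadBalancer host name itself because that requires network access. Note that the addresses behind a LoadBalancer host name may change over time.

```python
import socket


def ipv4_addresses(hostname: str) -> list[str]:
    """Resolve hostname to its distinct IPv4 addresses, in the order returned."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]
        if ip not in addresses:  # drop duplicates, keep order
            addresses.append(ip)
    return addresses


# Example: ipv4_addresses("<LoadBalancer Ingress host name>")
```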

In this example, the user can use 52.78.11.202 as the IP address of HiveServer2 when running Beeline.

This works because Beeline connects to the LoadBalancer, which forwards the connection to HiveServer2, rather than connecting to HiveServer2 directly.
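
Beeline takes a JDBC URL of the form jdbc:hive2://host:port/database through its -u option. The sketch below assembles such a URL from the LoadBalancer address. The port 10000 is only a placeholder (the usual HiveServer2 default). The user should substitute the port exposed by the Service hiveserver2, listed on the Port line of kubectl describe. The helper beeline_jdbc_url is hypothetical, and authentication parameters are omitted.

```python
def beeline_jdbc_url(host: str, port: int, database: str = "default") -> str:
    """Build a HiveServer2 JDBC URL of the form jdbc:hive2://host:port/database."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    return f"jdbc:hive2://{host}:{port}/{database}"


url = beeline_jdbc_url("52.78.11.202", 10000)  # port is an assumption
print(f'beeline -u "{url}"')
```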