This page shows how to use TypeScript code for generating YAML files and pre-built Docker images available at DockerHub in order to operate Hive/Spark on MR3 on Amazon EKS. By following the instructions, the user will learn:

- how to use TypeScript code to run Hive on MR3, Spark on MR3, Ranger, MR3-UI, Grafana, and Superset on Amazon EKS, where Hive/Spark on MR3 is configured to use autoscaling.

This scenario has the following prerequisites:

- The user can create IAM policies.

- The user has access to an S3 bucket storing the warehouse and all S3 buckets containing datasets.

- The user can create an EKS cluster with the command eksctl.

- The user can configure LoadBalancers.

- The user can create EFS.

- A database server for Metastore is ready and accessible from the EKS cluster.

- A database server for Ranger is ready and accessible from the EKS cluster. It is okay to use the same database server for Metastore.

- The user can run Beeline to connect to HiveServer2 running at a given address.

The user may create new resources (such as IAM policies) either on the AWS console or by executing AWS CLI.

We use pre-built Docker images available at DockerHub, so the user does not have to build new Docker images. In our example, we use a MySQL server for Metastore, but Postgres and MS SQL are also okay. This scenario should take 1 hour to 2 hours to complete, including the time for creating an EKS cluster.

For questions, please visit the MR3 Google Group or join MR3 Slack.

Install node, npm, ts-node

To execute TypeScript code, Node.js node, Node package manager npm, and TypeScript execution engine ts-node should be available.

In our example, we use the following versions:

$ node -v

v16.14.1

$ npm -v

8.5.0

$ ts-node --version

v10.7.0

Installation

Download an MR3 release containing TypeScript code:

$ git clone https://github.com/mr3project/mr3-run-k8s.git

$ cd mr3-run-k8s/typescript/

$ git checkout release-1.11-hive3

Install dependency modules:

$ npm install --save uuid

$ npm install --save js-yaml

$ npm install --save typescript

$ npm install --save es6-template-strings

$ npm install --save @types/node

$ npm install --save @tsconfig/node12

$ npm install --save @types/js-yaml

$ npm install --save @types/uuid

Change to the working directory and create a symbolic link:

$ cd src/aws/run/

$ ln -s ../../server server

Overview

We specify configuration parameters for all the components

in a single TypeScript file run.ts.

$ vi run.ts

const eksConf: eks.T = ...

const autoscalerConf: autoscaler.T = ...

const serviceConf: service.T = ...

const efsConf: efs.T = ...

const appsConf: apps.T = ...

const driverEnv: driver.T = ...

After updating run.ts, we execute ts-node to generate YAML files:

$ ts-node run.ts

$ ls *.yaml

apps.yaml autoscaler.yaml efs.yaml eks-cluster.yaml service.yaml spark1.yaml

We execute ts-node several times.

First we set eksConf and execute ts-node.

We use the following file from the first execution.

- eks-cluster.yaml is used as an argument for eksctl to create an EKS cluster.

Next we set autoscalerConf and serviceConf,

and execute ts-node.

We use the following files from the second execution.

- autoscaler.yaml is used to create Kubernetes Autoscaler.

- service.yaml is used to create two LoadBalancer services.

Then we set efsConf and execute ts-node.

We use the following file from the third execution.

- efs.yaml is used to mount EFS.

Finally we set appsConf and driverEnv,

and execute ts-node.

We use the following files from the last execution.

- apps.yaml is used to create all the components.

- spark1.yaml is used to create a Spark driver Pod.

If a wrong parameter is given or an inconsistency between parameters is detected,

we get an error message instead.

In the following example,

we get an error message "Namespace is mandatory." on the field namespace.

$ ts-node run.ts

Execution failed: AssertionError [ERR_ASSERTION]: Input invalid: [{"field":"namespace","msg":"Namespace is mandatory."}]

Run failed: AssertionError [ERR_ASSERTION]: Input invalid: [{"field":"namespace","msg":"Namespace is mandatory."}]
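The error above lists each invalid field together with a message. As a minimal sketch of how such a check could be written, the following code collects field errors and reports them in one message. The names FieldError, NamespaceInput, validateNamespace, and assertValid are hypothetical and are not taken from the MR3 code, which raises an AssertionError rather than a plain Error.

```typescript
// Hypothetical sketch: not the actual validation code shipped with MR3.
interface FieldError {
  field: string;
  msg: string;
}

interface NamespaceInput {
  namespace?: string;
}

// Return one entry per invalid field (an empty array means the input is valid).
function validateNamespace(input: NamespaceInput): FieldError[] {
  const errors: FieldError[] = [];
  if (input.namespace === undefined || input.namespace.trim() === "") {
    errors.push({ field: "namespace", msg: "Namespace is mandatory." });
  }
  return errors;
}

// Fail with a single message that carries all field errors as JSON.
function assertValid(errors: FieldError[]): void {
  if (errors.length > 0) {
    throw new Error("Input invalid: " + JSON.stringify(errors));
  }
}
```

Collecting all errors before failing lets the user fix several fields in run.ts in a single pass instead of re-running ts-node once per mistake.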

IAM policy for autoscaling

Create an IAM policy for autoscaling as shown below.

Get the ARN (Amazon Resource Name) of the IAM policy.

In our example, we create an IAM policy called EKSAutoScalingWorkerPolicy.

$ vi EKSAutoScalingWorkerPolicy.json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:DescribeAutoScalingInstances",

"autoscaling:DescribeLaunchConfigurations",

"autoscaling:DescribeTags",

"ec2:DescribeInstanceTypes",

"ec2:DescribeLaunchTemplateVersions"

],

"Resource": ["*"]

},

{

"Effect": "Allow",

"Action": [

"autoscaling:SetDesiredCapacity",

"autoscaling:TerminateInstanceInAutoScalingGroup",

"ec2:DescribeInstanceTypes",

"eks:DescribeNodegroup"

],

"Resource": ["*"]

}

]

}

$ aws iam create-policy --policy-name EKSAutoScalingWorkerPolicy --policy-document file://EKSAutoScalingWorkerPolicy.json

{

"Policy": {

...

"Arn": "arn:aws:iam::111111111111:policy/EKSAutoScalingWorkerPolicy",

...
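The ARN printed by aws iam create-policy is needed later in eksConf. As a small sketch, assuming the command output has been captured as a JSON string, the ARN can be read from the Policy.Arn field. The helper extractPolicyArn is a hypothetical name used only for illustration.

```typescript
// Hypothetical helper: read Policy.Arn from the JSON printed by `aws iam create-policy`.
interface CreatePolicyOutput {
  Policy: { Arn: string };
}

function extractPolicyArn(cliOutput: string): string {
  const parsed = JSON.parse(cliOutput) as Partial<CreatePolicyOutput>;
  const arn = parsed.Policy ? parsed.Policy.Arn : undefined;
  // Every ARN starts with the literal prefix "arn:".
  if (typeof arn !== "string" || arn.indexOf("arn:") !== 0) {
    throw new Error("No IAM policy ARN found in the output");
  }
  return arn;
}
```

The returned string is the value to paste into the field autoscalingWorkerPolicy (or accessS3Policy for the second policy) in eksConf.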

IAM policy for accessing S3 buckets

Create an IAM policy

for allowing every Pod to access S3 buckets storing the warehouse and containing datasets.

Adjust the Action field to restrict the set of operations permitted to Pods.

Get the ARN of the IAM policy.

In our example, we create an IAM policy called MR3AccessS3.

$ vi MR3AccessS3.json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:*"

],

"Resource": [

"arn:aws:s3:::hive-warehouse-dir",

"arn:aws:s3:::hive-warehouse-dir/*",

"arn:aws:s3:::hive-partitioned-1000-orc",

"arn:aws:s3:::hive-partitioned-1000-orc/*"

]

}

]

}

$ aws iam create-policy --policy-name MR3AccessS3 --policy-document file://MR3AccessS3.json

{

"Policy": {

...

"Arn": "arn:aws:iam::111111111111:policy/MR3AccessS3",

...
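Each bucket appears twice in the Resource field: once for the bucket itself and once for the objects inside it. The following sketch builds the same policy document from a list of bucket names. The functions s3Resources and buildS3AccessPolicy are hypothetical helpers, not part of the MR3 code.

```typescript
// Hypothetical helpers for producing the policy document shown above.
interface PolicyStatement {
  Effect: "Allow";
  Action: string[];
  Resource: string[];
}

interface PolicyDocument {
  Version: "2012-10-17";
  Statement: PolicyStatement[];
}

// For every bucket, emit the bucket ARN and the ARN pattern for its objects.
function s3Resources(buckets: string[]): string[] {
  const resources: string[] = [];
  buckets.forEach((bucket) => {
    resources.push(`arn:aws:s3:::${bucket}`);
    resources.push(`arn:aws:s3:::${bucket}/*`);
  });
  return resources;
}

// The default action "s3:*" matches the example; pass a narrower list to restrict Pods.
function buildS3AccessPolicy(buckets: string[], actions: string[] = ["s3:*"]): PolicyDocument {
  return {
    Version: "2012-10-17",
    Statement: [{ Effect: "Allow", Action: actions, Resource: s3Resources(buckets) }],
  };
}

const examplePolicy = buildS3AccessPolicy(["hive-warehouse-dir", "hive-partitioned-1000-orc"]);
console.log(JSON.stringify(examplePolicy, null, 2));
```

Passing a second argument such as ["s3:GetObject", "s3:ListBucket"] would produce a read-only variant, which may suit buckets that only hold input datasets.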

eksConf: eks.T

We create a Kubernetes cluster hive-mr3.

We create an EKS cluster in the region ap-northeast-2 and the zone ap-northeast-2a.

name: "hive-mr3",

region: "ap-northeast-2",

zone: "ap-northeast-2a",

We create a master node group hive-mr3-master for all the components except MR3 ContainerWorkers.

We use m5d.4xlarge for the instance type of the master node group.

We create 2 nodes in the master node group.

To add more nodes, the user should manually add new nodes in the node group.

masterNodeGroup: "hive-mr3-master",

masterInstanceType: "m5d.4xlarge",

masterCapacity: 2,

We create a worker node group hive-mr3-worker for MR3 ContainerWorkers.

We use m5d.4xlarge for the instance type of the worker node group.

The worker node group uses autoscaling.

In our example, the worker node group starts with zero nodes.

It uses up to 4 on-demand instances and up to 8 on-demand/spot instances.

workerNodeGroup: "hive-mr3-worker",

workerInstanceType: "m5d.4xlarge",

workerMinCapacityOnDemand: 0,

workerMaxCapacityOnDemand: 4,

workerMaxCapacityTotal: 8,

autoscalingWorkerPolicy: "arn:aws:iam::111111111111:policy/EKSAutoScalingWorkerPolicy",

accessS3Policy: "arn:aws:iam::111111111111:policy/MR3AccessS3",

- autoscalingWorkerPolicy uses the ARN of the IAM policy for autoscaling.

- accessS3Policy uses the ARN of the IAM policy for accessing S3 buckets.
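The three worker capacity fields above are only meaningful when they are ordered consistently. The sketch below assumes that workerMaxCapacityTotal caps the sum of on-demand and spot instances, so the expected ordering is 0 <= workerMinCapacityOnDemand <= workerMaxCapacityOnDemand <= workerMaxCapacityTotal. The function checkWorkerCapacity is hypothetical, and the checks actually performed by run.ts may differ.

```typescript
// Hypothetical consistency check; the real checks in the MR3 code may differ.
interface WorkerCapacity {
  workerMinCapacityOnDemand: number;
  workerMaxCapacityOnDemand: number;
  workerMaxCapacityTotal: number;
}

// Return a list of problems (an empty list means the values are consistent).
function checkWorkerCapacity(c: WorkerCapacity): string[] {
  const problems: string[] = [];
  if (c.workerMinCapacityOnDemand < 0) {
    problems.push("workerMinCapacityOnDemand must not be negative");
  }
  if (c.workerMinCapacityOnDemand > c.workerMaxCapacityOnDemand) {
    problems.push("workerMinCapacityOnDemand exceeds workerMaxCapacityOnDemand");
  }
  if (c.workerMaxCapacityOnDemand > c.workerMaxCapacityTotal) {
    problems.push("workerMaxCapacityOnDemand exceeds workerMaxCapacityTotal");
  }
  return problems;
}

const exampleProblems = checkWorkerCapacity({
  workerMinCapacityOnDemand: 0,
  workerMaxCapacityOnDemand: 4,
  workerMaxCapacityTotal: 8,
});
console.log(exampleProblems.length === 0 ? "capacity settings are consistent" : exampleProblems.join("; "));
```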

We attach a label roles: masters to every master node,

and a label roles: workers to every worker node.

masterLabelRoles: "masters",

workerLabelRoles: "workers",

We specify the resources for HiveServer2, Metastore, MR3 DAGAppMaster for Hive, Ranger, Superset, and MR3 DAGAppMaster for Spark.

hiveResources: { cpu: 4, memoryInMb: 16 * 1024 },

metastoreResources: { cpu: 4, memoryInMb: 16 * 1024 },

mr3MasterResources: { cpu: 6, memoryInMb: 20 * 1024 },

rangerResources: { cpu: 2, memoryInMb: 6 * 1024 },

supersetResources: { cpu: 2, memoryInMb: 10 * 1024 },

sparkmr3Resources: { cpu: 6, memoryInMb: 20 * 1024 }
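Since all of these components run in the master node group, it can help to compare the sum of the requests with the size of the group. The sketch below adds up the values above and compares them with the raw capacity of two m5d.4xlarge instances (16 vCPUs and 64 GiB of memory each). The names Resources, sumResources, and rawCapacity are illustrative only. The comparison is a necessary condition, not a sufficient one: allocatable resources on a node are smaller than its raw capacity, other Pods such as Apache server and MR3-UI/Grafana are not counted, and each Pod must fit on a single node.

```typescript
// Illustrative arithmetic only; the type and function names are not from the MR3 code.
interface Resources {
  cpu: number;
  memoryInMb: number;
}

const masterComponents: { [component: string]: Resources } = {
  hiveResources: { cpu: 4, memoryInMb: 16 * 1024 },
  metastoreResources: { cpu: 4, memoryInMb: 16 * 1024 },
  mr3MasterResources: { cpu: 6, memoryInMb: 20 * 1024 },
  rangerResources: { cpu: 2, memoryInMb: 6 * 1024 },
  supersetResources: { cpu: 2, memoryInMb: 10 * 1024 },
  sparkmr3Resources: { cpu: 6, memoryInMb: 20 * 1024 },
};

function sumResources(items: Resources[]): Resources {
  return items.reduce<Resources>(
    (acc, r) => ({ cpu: acc.cpu + r.cpu, memoryInMb: acc.memoryInMb + r.memoryInMb }),
    { cpu: 0, memoryInMb: 0 }
  );
}

const requestedTotal = sumResources(
  Object.keys(masterComponents).map((key) => masterComponents[key])
);

// Raw capacity of the master node group: masterCapacity = 2 nodes of type m5d.4xlarge.
const rawCapacity: Resources = { cpu: 2 * 16, memoryInMb: 2 * 64 * 1024 };

console.log(requestedTotal); // { cpu: 24, memoryInMb: 90112 }
console.log(
  requestedTotal.cpu <= rawCapacity.cpu && requestedTotal.memoryInMb <= rawCapacity.memoryInMb
); // true
```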

Creating an EKS cluster

Execute ts-node to generate YAML files.

Execute eksctl with eks-cluster.yaml.

$ ts-node run.ts

$ eksctl create cluster -f eks-cluster.yaml

2022-08-21 22:25:29 [ℹ] eksctl version 0.86.0

2022-08-21 22:25:29 [ℹ] using region ap-northeast-2

2022-08-21 22:25:29 [ℹ] setting availability zones to [ap-northeast-2a ap-northeast-2b ap-northeast-2d]

..

2022-08-21 22:42:40 [✔] EKS cluster "hive-mr3" in "ap-northeast-2" region is ready

The user can verify that two master nodes are available in the EKS cluster.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-192-168-15-85.ap-northeast-2.compute.internal Ready <none> 74s v1.21.14-eks-ba74326

ip-192-168-24-94.ap-northeast-2.compute.internal Ready <none> 72s v1.21.14-eks-ba74326

Get the public IP addresses of the master nodes which we may need when checking access to the database servers for Metastore and Ranger.

$ kubectl describe node ip-192-168-15-85.ap-northeast-2.compute.internal | grep -e InternalIP -e ExternalIP

InternalIP: 192.168.15.85

ExternalIP: 52.78.131.218

$ kubectl describe node ip-192-168-24-94.ap-northeast-2.compute.internal | grep -e InternalIP -e ExternalIP

InternalIP: 192.168.24.94

ExternalIP: 13.209.83.3

autoscalerConf: autoscaler.T

autoscalingScaleDownDelayAfterAddMin: 5,

autoscalingScaleDownUnneededTimeMin: 1

- autoscalingScaleDownDelayAfterAddMin specifies the delay (in minutes) for removing an existing worker node after adding a new worker node.

- autoscalingScaleDownUnneededTimeMin specifies the time to wait (in minutes) before removing an unneeded worker node with no ContainerWorker Pod.
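The two fields correspond in meaning to the Cluster Autoscaler flags --scale-down-delay-after-add and --scale-down-unneeded-time. The following sketch shows how the minute values could be rendered as such flags. Whether the generated autoscaler.yaml uses exactly this form is an assumption, and toAutoscalerFlags is a hypothetical function.

```typescript
// Hypothetical sketch: the mapping to command-line flags is an assumption,
// not taken from the generated autoscaler.yaml.
interface AutoscalerConf {
  autoscalingScaleDownDelayAfterAddMin: number;
  autoscalingScaleDownUnneededTimeMin: number;
}

// Render the two delays as Cluster Autoscaler flags with durations in minutes.
function toAutoscalerFlags(conf: AutoscalerConf): string[] {
  return [
    `--scale-down-delay-after-add=${conf.autoscalingScaleDownDelayAfterAddMin}m`,
    `--scale-down-unneeded-time=${conf.autoscalingScaleDownUnneededTimeMin}m`,
  ];
}

const exampleFlags = toAutoscalerFlags({
  autoscalingScaleDownDelayAfterAddMin: 5,
  autoscalingScaleDownUnneededTimeMin: 1,
});
console.log(exampleFlags.join(" "));
// --scale-down-delay-after-add=5m --scale-down-unneeded-time=1m
```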

serviceConf: service.T

We use hivemr3 for the Kubernetes namespace.

We do not use HTTPS for connecting to Apache server which serves as a gateway to Ranger, MR3-UI, Grafana, Superset, and Spark UI.

In order to use HTTPS,

the user should provide an SSL certificate created with AWS Certificate Manager

in an optional field sslCertificateArn.

namespace: "hivemr3",

useHttps: false

Starting Kubernetes Autoscaler

Execute ts-node to generate YAML files.

Execute kubectl with autoscaler.yaml to start the Kubernetes Autoscaler.

$ ts-node run.ts

$ kubectl apply -f autoscaler.yaml

serviceaccount/cluster-autoscaler created

deployment.apps/cluster-autoscaler created

clusterrole.rbac.authorization.k8s.io/cluster-autoscaler created

clusterrolebinding.rbac.authorization.k8s.io/cluster-autoscaler created

role.rbac.authorization.k8s.io/cluster-autoscaler created

rolebinding.rbac.authorization.k8s.io/cluster-autoscaler created

The user can check that the Kubernetes Autoscaler has started properly.

$ kubectl get pods -n kube-system | grep autoscaler

cluster-autoscaler-7cb499575f-4h8tn 1/1 Running 0 16s

Creating LoadBalancer services

Execute kubectl with service.yaml

to create two LoadBalancer services.

Later the LoadBalancer with LoadBalancerPort 8080 is connected to Apache server

while the LoadBalancer with LoadBalancerPort 10001/9852 is connected to HiveServer2.

$ kubectl create -f service.yaml

namespace/hivemr3 created

service/apache created

service/hiveserver2 created

$ aws elb describe-load-balancers

...

"CanonicalHostedZoneName": "a9b31625797fa420c982465990a335f6-1934820481.ap-northeast-2.elb.amazonaws.com",

"CanonicalHostedZoneNameID": "ZWKZPGTI48KDX",

"ListenerDescriptions": [

{

"Listener": {

"Protocol": "TCP",

"LoadBalancerPort": 8080,

...

"CanonicalHostedZoneName": "a4a3c9cf4b2244671bc986fc4dade227-356643462.ap-northeast-2.elb.amazonaws.com",

"CanonicalHostedZoneNameID": "ZWKZPGTI48KDX",

"ListenerDescriptions": [

{

"Listener": {

"Protocol": "TCP",

"LoadBalancerPort": 10001,

...
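The two LoadBalancers can be told apart by their listener ports. As a sketch, assuming the full JSON output of aws elb describe-load-balancers has been parsed into an object, the host name for a given port can be looked up as follows. The interfaces cover only the fields used here, and hostNameForPort is a hypothetical helper.

```typescript
// Hypothetical helper; only the fields needed for the lookup are declared.
interface LoadBalancerDescription {
  CanonicalHostedZoneName: string;
  ListenerDescriptions: { Listener: { LoadBalancerPort: number } }[];
}

interface DescribeLoadBalancersOutput {
  LoadBalancerDescriptions: LoadBalancerDescription[];
}

// Return the host name of the first LoadBalancer listening on the given port.
function hostNameForPort(output: DescribeLoadBalancersOutput, port: number): string | undefined {
  const matches = output.LoadBalancerDescriptions.filter((lb) =>
    lb.ListenerDescriptions.some((d) => d.Listener.LoadBalancerPort === port)
  );
  const first = matches[0];
  return first ? first.CanonicalHostedZoneName : undefined;
}
```

With the output above, port 8080 yields the host name for Apache server and port 10001 yields the host name for HiveServer2.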

Get the address and host name of each service.

$ nslookup a9b31625797fa420c982465990a335f6-1934820481.ap-northeast-2.elb.amazonaws.com

...

Name: a9b31625797fa420c982465990a335f6-1934820481.ap-northeast-2.elb.amazonaws.com

Address: 15.164.153.137

Name: a9b31625797fa420c982465990a335f6-1934820481.ap-northeast-2.elb.amazonaws.com

Address: 15.165.246.187

$ nslookup a4a3c9cf4b2244671bc986fc4dade227-356643462.ap-northeast-2.elb.amazonaws.com

...

Name: a4a3c9cf4b2244671bc986fc4dade227-356643462.ap-northeast-2.elb.amazonaws.com

Address: 15.164.6.96

Name: a4a3c9cf4b2244671bc986fc4dade227-356643462.ap-northeast-2.elb.amazonaws.com

Address: 3.39.50.65

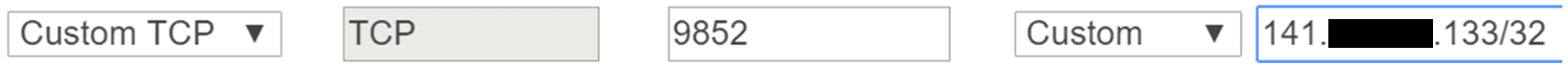

Configuring the LoadBalancers

Get the security group associated with the LoadBalancers.

If necessary, edit the inbound rule in order to restrict the source IP addresses

(e.g., by changing the source from 0.0.0.0/0 to (IP address)/32).

The LoadBalancer for HiveServer2 disconnects Beeline showing no activity for the idle timeout period, which is 60 seconds by default. The user may want to increase the idle timeout period, e.g., to 1200 seconds. Otherwise Beeline loses the connection to HiveServer2 even after a brief period of inactivity.
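The idle timeout can be changed on the AWS console or with the AWS CLI command aws elb modify-load-balancer-attributes, which accepts a JSON document through its --load-balancer-attributes option. The sketch below only builds that JSON document; idleTimeoutAttributes is a hypothetical helper, and the name of the LoadBalancer still has to be supplied by the user when running the command.

```typescript
// Hypothetical helper: build the attributes document for a Classic Load Balancer.
function idleTimeoutAttributes(seconds: number): string {
  // Reject zero, negative, fractional, and NaN values.
  if (seconds <= 0 || Math.floor(seconds) !== seconds) {
    throw new Error("The idle timeout must be a positive integer number of seconds");
  }
  return JSON.stringify({ ConnectionSettings: { IdleTimeout: seconds } });
}

console.log(idleTimeoutAttributes(1200));
// {"ConnectionSettings":{"IdleTimeout":1200}}
```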

Creating EFS

Assuming that the name of the EKS cluster is hive-mr3

(specified in the field name in eksConf),

get the VPC ID of the CloudFormation stack eksctl-hive-mr3-cluster.

$ aws ec2 describe-vpcs --filter Name=tag:aws:cloudformation:stack-name,Values=eksctl-hive-mr3-cluster --query "Vpcs[*].[VpcId]"

[

[

"vpc-0514cc58f49219037"

]

]

$ VPCID=vpc-0514cc58f49219037

Get the public subnet ID of the CloudFormation stack eksctl-hive-mr3-cluster.

$ aws ec2 describe-subnets --filter Name=vpc-id,Values=$VPCID Name=availability-zone,Values=ap-northeast-2a Name=tag:aws:cloudformation:stack-name,Values=eksctl-hive-mr3-cluster Name=tag:Name,Values="*Public*" --query "Subnets[*].[SubnetId]"

[

[

"subnet-0dc4f1d62f8eaf27a"

]

]

$ SUBNETID=subnet-0dc4f1d62f8eaf27a

Get the ID of the security group for the EKS cluster

that matches the pattern eksctl-hive-mr3-cluster-ClusterSharedNodeSecurityGroup-*.

$ aws ec2 describe-security-groups --filters Name=vpc-id,Values=$VPCID Name=group-name,Values="eksctl-hive-mr3-cluster-ClusterSharedNodeSecurityGroup-*" --query "SecurityGroups[*].[GroupName,GroupId]"

[

[

"eksctl-hive-mr3-cluster-ClusterSharedNodeSecurityGroup-LDAQGXV3RGXF",

"sg-06a5105157555c5bc"

]

]

$ SGROUPALL=sg-06a5105157555c5bc

Create EFS in the Availability Zone specified in the field zone in eksConf.

Get the file system ID of EFS.

$ aws efs create-file-system --performance-mode generalPurpose --throughput-mode bursting --availability-zone-name ap-northeast-2a

...

"FileSystemId": "fs-00939cf3e2d140beb",

...

$ EFSID=fs-00939cf3e2d140beb

Create a mount target using the subnet ID of CloudFormation eksctl-hive-mr3-cluster

and the security group ID for the EKS cluster.

Get the mount target ID which is necessary when deleting the EKS cluster.

$ aws efs create-mount-target --file-system-id $EFSID --subnet-id $SUBNETID --security-groups $SGROUPALL

...

"MountTargetId": "fsmt-089a2abda1f02dc15",

...

$ MOUNTID=fsmt-089a2abda1f02dc15
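The --query option in the commands above returns the IDs wrapped in nested JSON arrays, which the user then copies by hand into shell variables. As a sketch of how the copying step could be automated, the following code pulls out the single ID with a given prefix (such as vpc-, subnet-, or sg-) and fails if there is not exactly one. The functions collectStrings and singleId are hypothetical helpers.

```typescript
// Hypothetical helpers for reading IDs from `--query` output such as [["vpc-..."]].
function collectStrings(value: unknown, out: string[]): void {
  if (typeof value === "string") {
    out.push(value);
  } else if (Array.isArray(value)) {
    value.forEach((item) => collectStrings(item, out));
  }
}

// Return the only string that starts with the prefix; fail on zero or several matches.
function singleId(queryOutput: string, prefix: string): string {
  const found: string[] = [];
  collectStrings(JSON.parse(queryOutput), found);
  const ids = found.filter((s) => s.indexOf(prefix) === 0);
  const id = ids[0];
  if (ids.length !== 1 || id === undefined) {
    throw new Error(`Expected exactly one ID starting with "${prefix}", found ${ids.length}`);
  }
  return id;
}

console.log(singleId('[["vpc-0514cc58f49219037"]]', "vpc-"));
// vpc-0514cc58f49219037
```

Failing on several matches is deliberate: if more than one subnet or security group matches the filters, picking one silently could attach the mount target to the wrong resource.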

efsConf: efs.T

efsId: "fs-00939cf3e2d140beb"

- efsId specifies the file system ID of EFS.

Mounting EFS

Execute ts-node to generate YAML files.

Execute kubectl with efs.yaml to mount EFS.

$ ts-node run.ts

$ kubectl create -f efs.yaml

serviceaccount/efs-provisioner created

configmap/efs-provisioner created

deployment.apps/efs-provisioner created

storageclass.storage.k8s.io/aws-efs created

clusterrole.rbac.authorization.k8s.io/efs-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-efs-provisioner created

role.rbac.authorization.k8s.io/leader-locking-efs-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-efs-provisioner created

The user can find a new StorageClass aws-efs

and a new Pod in the namespace hivemr3.

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

aws-efs example.com/aws-efs Delete Immediate false 14s

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 19m

$ kubectl get pods -n hivemr3

NAME READY STATUS RESTARTS AGE

efs-provisioner-69764fcd97-nw6pl 1/1 Running 0 12s

Access to the database servers for Metastore and Ranger

Check if the database servers for Metastore and Ranger are accessible from the master nodes. If the database server is running on Amazon AWS, the user may have to update its security group or VPC configuration.

appsConf: apps.T

warehouseDir: "s3a://data-warehouse/hivemr3",

persistentVolumeClaimStorageInGb: 100,

externalIpHostname: "a9b31625797fa420c982465990a335f6-1934820481.ap-northeast-2.elb.amazonaws.com",

hiveserver2Ip: "15.164.6.96",

hiveserver2IpHostname: "hs2host",

- warehouseDir should be set to the S3 bucket storing the warehouse. Note that for using S3, we should use prefix s3a, not s3.

- persistentVolumeClaimStorageInGb specifies the amount of storage in the PersistentVolume to use.

- externalIpHostname should be set to the host name of the LoadBalancer service for Apache server.

- hiveserver2Ip should be set to the IP address of the LoadBalancer service for HiveServer2 (to which clients can connect from the outside of the Kubernetes cluster).

- hiveserver2IpHostname specifies an alias for the host name for HiveServer2. (The alias is used only internally for Kerberos instance and SSL encryption.)
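Using the prefix s3 instead of s3a in warehouseDir is an easy mistake to make. The following sketch rejects any value that does not start with s3a:// and returns the bucket name, which can then be compared with the buckets listed in the IAM policy for accessing S3. The function warehouseBucket is hypothetical and applies only to this scenario, where the warehouse is stored on S3.

```typescript
// Hypothetical check for this scenario, where the warehouse lives on S3.
function warehouseBucket(warehouseDir: string): string {
  // Capture the bucket name between "s3a://" and the first "/" (if any).
  const match = /^s3a:\/\/([^\/]+)(\/.*)?$/.exec(warehouseDir);
  if (match === null) {
    throw new Error(`warehouseDir must start with s3a:// (got "${warehouseDir}")`);
  }
  return match[1];
}

console.log(warehouseBucket("s3a://data-warehouse/hivemr3"));
// data-warehouse
```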

dbType: "MYSQL",

databaseHost: "1.1.1.1",

databaseName: "hive3mr3",

userName: "root",

password: "passwd",

initSchema: true,

- We use a MySQL server for Metastore whose address is 1.1.1.1.

- databaseName specifies the name of the database for Hive inside the MySQL server.

- userName and password specify the user name and password of the MySQL server for Metastore.

- To initialize database schema, initSchema is set to true.

service: "HIVE_service",

dbFlavor: "MYSQL",

dbRootUser: "root",

dbRootPassword: "passwd",

dbHost: "1.1.1.1",

dbPassword: "password",

adminPassword: "rangeradmin1",

- In our example, we use a Ranger service HIVE_service.

- We use a MySQL server for Ranger whose address is 1.1.1.1.

- dbRootUser and dbRootPassword specify the user name and password of the MySQL server for Ranger.

- dbPassword specifies a password for the user rangeradmin which is used only internally by Ranger.

- adminPassword specifies the initial password for the user admin on the Ranger Admin UI.

For using a MySQL server,

Ranger automatically downloads a MySQL connector

from https://cdn.mysql.com/Downloads/Connector-J/mysql-connector-java-8.0.28.tar.gz.

The user should check the compatibility between the server and the connector.

We register four names for Spark drivers: spark1, spark2, spark3, spark4.

When a Spark driver with one of these names is created,

its UI page is accessible via Apache server.

(Creating Spark drivers with different names is allowed,

but their UI pages are not accessible via Apache server.)

driverNameStr: "spark1,spark2,spark3,spark4",
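The field driverNameStr is a comma-separated list, so a Spark driver gets a UI page behind Apache server only if its name appears in that list. The sketch below shows the lookup. The functions parseDriverNames and isUiAccessible are hypothetical, and trimming spaces around names is an assumption of this sketch.

```typescript
// Hypothetical helpers; trimming whitespace around names is an assumption.
function parseDriverNames(driverNameStr: string): string[] {
  return driverNameStr
    .split(",")
    .map((s) => s.trim())
    .filter((s) => s.length > 0);
}

// A driver's UI page is reachable via Apache server only if its name is registered.
function isUiAccessible(driverNameStr: string, driverName: string): boolean {
  return parseDriverNames(driverNameStr).indexOf(driverName) >= 0;
}

const registered = "spark1,spark2,spark3,spark4";
console.log(isUiAccessible(registered, "spark1")); // true
console.log(isUiAccessible(registered, "spark5")); // false
```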

driverEnv: driver.T

We create a YAML file spark1.yaml for creating a Spark driver Pod

with 2 CPU cores and 8GB of memory.

name: "spark1",

resources: {

cpu: 2,

memoryInMb: 8 * 1024

}

Running Hive on MR3

Execute ts-node to generate YAML files.

Execute kubectl with apps.yaml to start Hive on MR3.

$ ts-node run.ts

$ kubectl create -f apps.yaml

The user can find that a PersistentVolumeClaim workdir-pvc is in use.

$ kubectl get pvc -n hivemr3

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

workdir-pvc Bound pvc-683919a0-17d3-456e-945a-7eb58b507a16 100Gi RWX aws-efs 7s

If successful, the user can find a total of 8 Pods:

- Apache server

- Public HiveServer2

- Internal HiveServer2

- Metastore

- DAGAppMaster for Hive

- Ranger

- Superset

- MR3-UI/Grafana (which also runs a Timeline Server and Prometheus)

The DAGAppMaster Pod may restart a few times if the MR3-UI/Grafana Pod is not initialized quickly. In our example, all the Pods become ready in 2 minutes, but it may take longer because of the time for downloading Docker images.

$ kubectl get pods -n hivemr3

NAME READY STATUS RESTARTS AGE

apache-0 1/1 Running 0 2m

efs-provisioner-69764fcd97-nw6pl 1/1 Running 0 14m

hiveserver2-6cc8f87878-5ld5d 1/1 Running 0 2m

hiveserver2-internal-5789974bc8-t8q2j 1/1 Running 0 2m

metastore-0 1/1 Running 0 2m

mr3master-9261-0-6c578c87dd-ldmxl 1/1 Running 0 106s

ranger-0 2/2 Running 0 2m

superset-0 1/1 Running 0 2m

timeline-0 4/4 Running 0 2m

The user can find that several directories have been created under the PersistentVolume.

Inside the HiveServer2 Pod,

the PersistentVolume is mounted in the directory /opt/mr3-run/work-dir/.

$ kubectl exec -n hivemr3 -it hiveserver2-6cc8f87878-5ld5d -- /bin/bash

hive@hiveserver2-6cc8f87878-5ld5d:/opt/mr3-run/hive$ ls /opt/mr3-run/work-dir/

apache2.log ats grafana hive httpd.pid log prometheus ranger superset

Using Hive/Spark on MR3

The user can use all the components exactly in the same way as on Kubernetes. We refer the user to the following pages for details.

- Creating a Ranger service

- Accessing MR3-UI, Grafana, Superset

- Running queries

- Running Spark on MR3

- Running two Spark applications

- Limitations in accessing Hive Metastore from Spark SQL

- Stopping Hive/Spark on MR3

Deleting the EKS cluster

Because of the additional components configured manually, it takes a few extra steps to delete the EKS cluster. In order to delete the EKS cluster, proceed in the following order.

- Delete all the components.

$ kubectl delete -f apps.yaml

- Delete resources created automatically by Hive on MR3.

$ kubectl -n hivemr3 delete configmap mr3conf-configmap-master mr3conf-configmap-worker

$ kubectl -n hivemr3 delete svc service-master-9261-0 service-worker

$ kubectl -n hivemr3 delete deployment --all

$ kubectl -n hivemr3 delete pods --all

- Delete the resources for EFS.

$ kubectl delete -f efs.yaml

- Delete the services.

$ kubectl delete -f service.yaml

- Remove the mount target for EFS.

$ aws efs delete-mount-target --mount-target-id $MOUNTID

- Delete EFS if necessary. Note that the same EFS can be reused for the next installation of Hive on MR3.

$ aws efs delete-file-system --file-system-id $EFSID

- Stop Kubernetes Autoscaler.

$ kubectl delete -f autoscaler.yaml

- Delete EKS with eksctl.

$ eksctl delete cluster -f eks-cluster.yaml

...

2022-08-22 00:04:30 [✔] all cluster resources were deleted

If the last command fails, the user should delete the EKS cluster manually. Proceed in the following order on the AWS console.

- Delete security groups manually.

- Delete the NAT gateway created for the EKS cluster, delete the VPC, and then delete the Elastic IP address.

- Delete LoadBalancers.

- Delete the CloudFormation stacks.