A DAGAppMaster runs in one of the following four modes:

- LocalThread mode: A DAGAppMaster starts as a new thread inside the MR3Client.

- LocalProcess mode: A DAGAppMaster starts as a new process on the same machine where the MR3Client is running.

- Yarn mode: A DAGAppMaster starts as a new container in the Hadoop cluster.

- Kubernetes mode: A DAGAppMaster starts as a pod in a Kubernetes cluster.

A ContainerWorker runs in one of the following four modes:

- Local mode: A ContainerWorker starts as a thread inside the DAGAppMaster.

- Yarn mode: A ContainerWorker starts as a container in a Hadoop cluster.

- Kubernetes mode: A ContainerWorker starts as a pod in a Kubernetes cluster.

- Process mode: A ContainerWorker starts as an ordinary process.

Combinations of DAGAppMaster and ContainerWorker modes

MR3 supports many combinations of DAGAppMaster and ContainerWorker modes. For example, LocalProcess mode allows us to run the DAGAppMaster on a dedicated machine outside the Hadoop cluster (as an unmanaged ApplicationMaster), which is particularly useful when running many concurrent DAGs in the same DAGAppMaster. As another example, a DAGAppMaster in Yarn mode can run its ContainerWorkers in Local mode, similarly to the Uber mode of Hadoop. Moreover, a DAGAppMaster can mix ContainerWorkers with different modes. For example, long-running ContainerWorkers can run in Yarn mode while short-lived ContainerWorkers can run in Local mode.

The following table shows all the combinations permitted by MR3. In a Kubernetes cluster, a DAGAppMaster in LocalProcess mode should run inside the Kubernetes cluster. For more details on running MR3 on Kubernetes, see Running MR3Client inside/outside Kubernetes.

| Cluster | DAGAppMaster | ContainerWorker |
|---|---|---|
| Hadoop/Kubernetes/Standalone | LocalThread | Local |
| Hadoop/Kubernetes/Standalone | LocalProcess | Local |
| Hadoop | LocalThread | Yarn |
| Hadoop | LocalProcess | Yarn |
| Hadoop | Yarn | Local |
| Hadoop | Yarn | Yarn |
| Kubernetes | LocalThread | Kubernetes |
| Kubernetes | LocalProcess (only inside the cluster) | Kubernetes |
| Kubernetes | Kubernetes | Local |
| Kubernetes | Kubernetes | Kubernetes |
| Standalone | LocalThread | Process |
| Standalone | LocalProcess | Process |

On Hadoop

Here are schematic descriptions of six combinations in the Hadoop cluster:

-

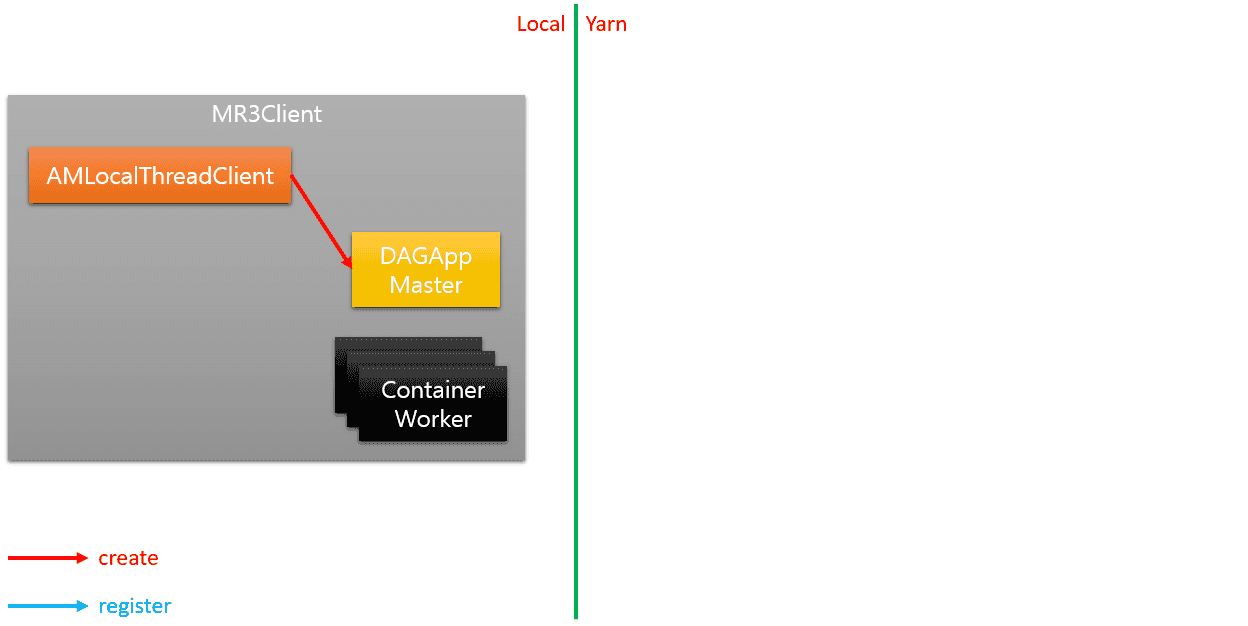

DAGAppMaster in LocalThread mode, ContainerWorker in Local mode

-

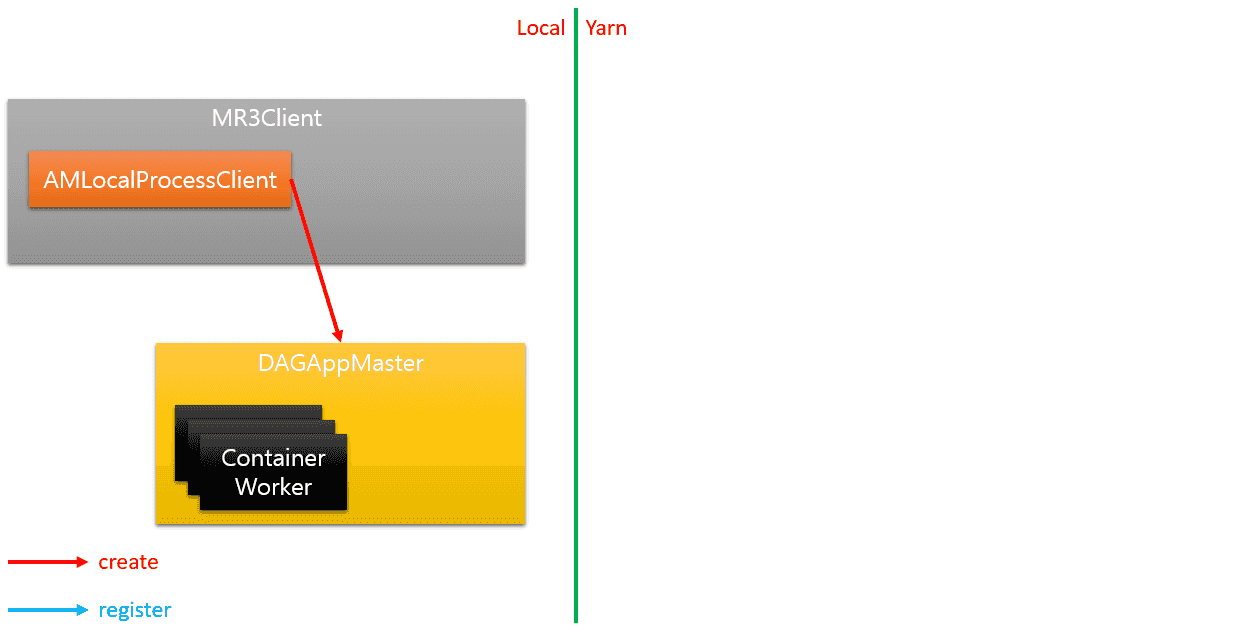

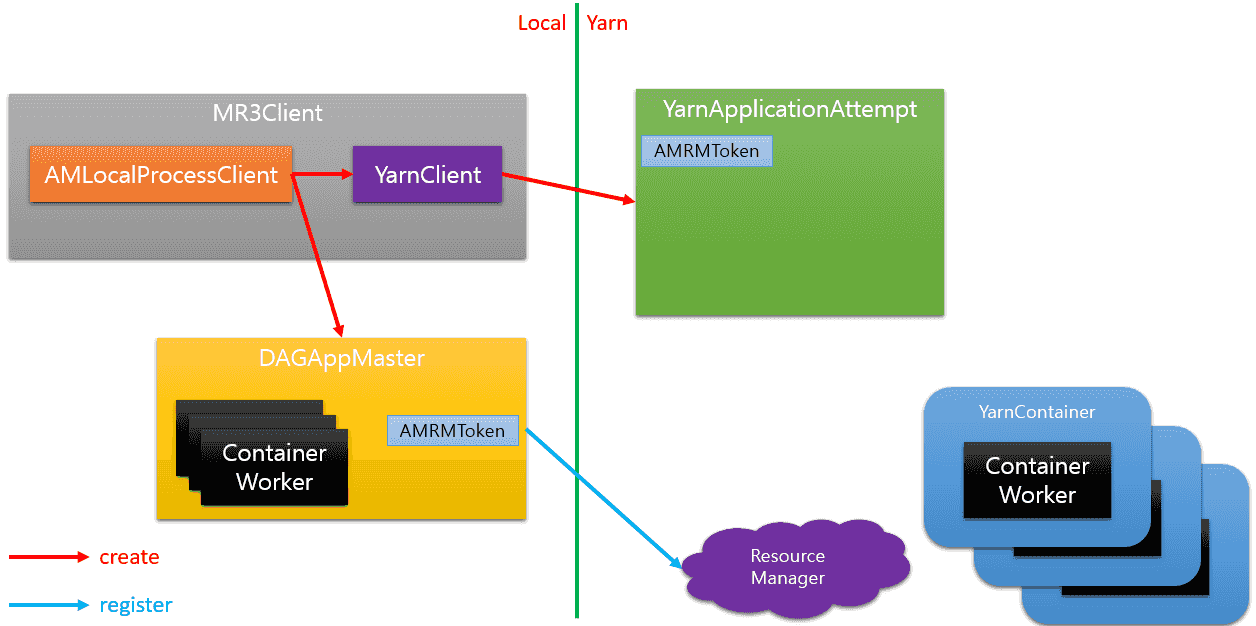

DAGAppMaster in LocalProcess mode, ContainerWorker in Local mode

-

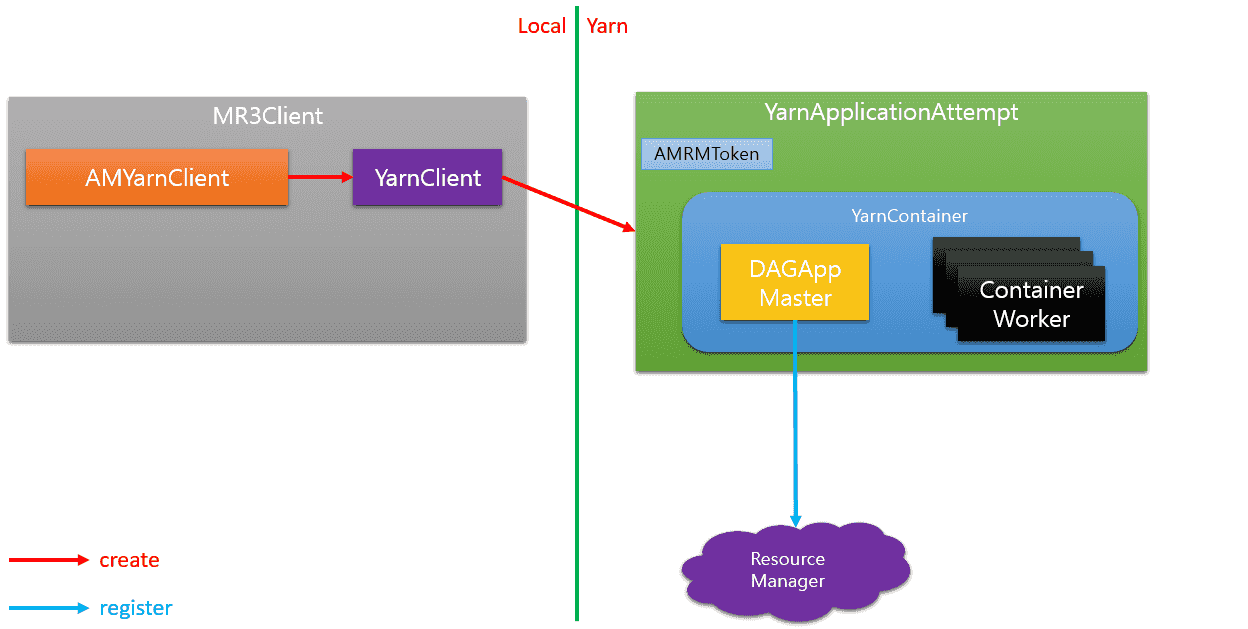

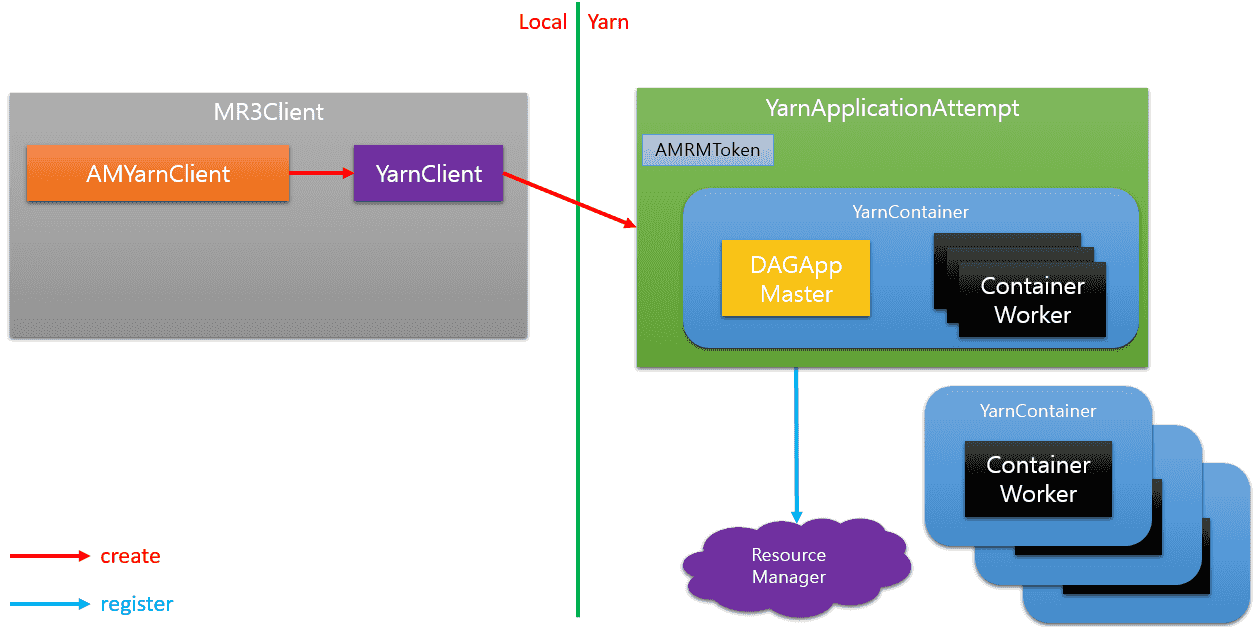

DAGAppMaster in Yarn mode, ContainerWorker in Local mode

-

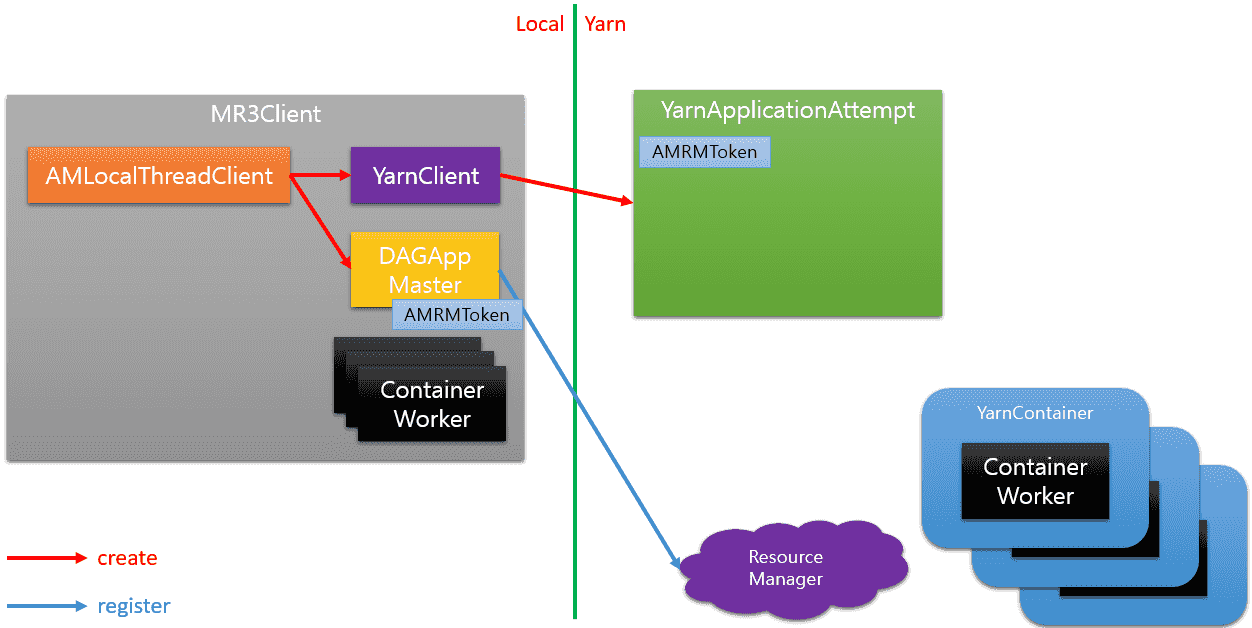

DAGAppMaster in LocalThread mode, ContainerWorker in Yarn mode

-

DAGAppMaster in LocalProcess mode, ContainerWorker in Yarn mode

-

DAGAppMaster in Yarn mode, ContainerWorker in Yarn mode

On Kubernetes

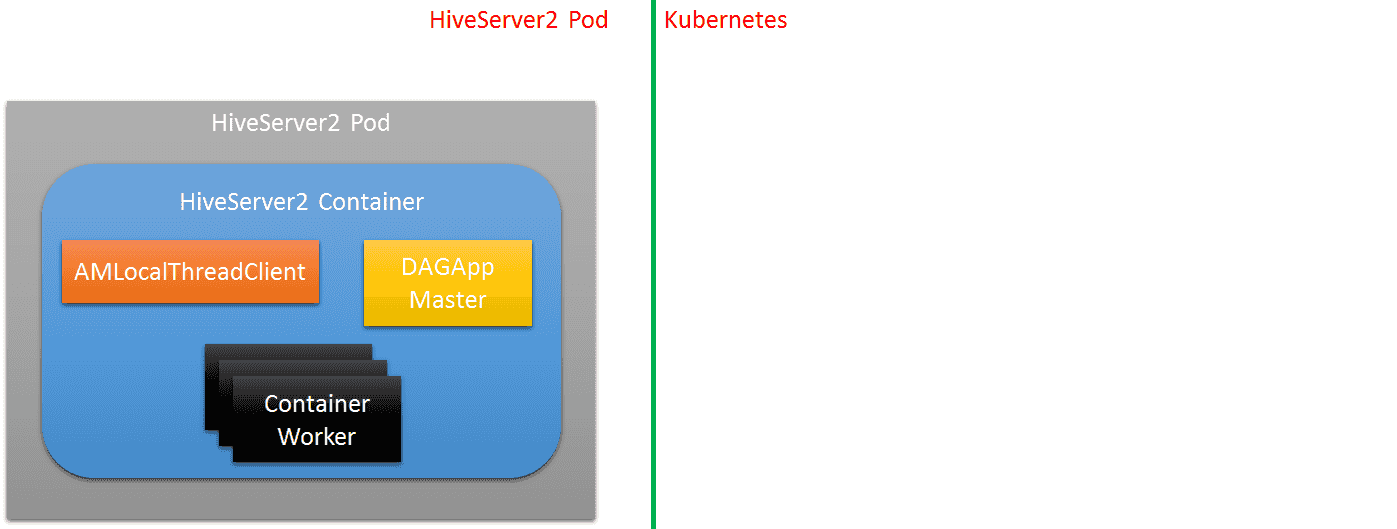

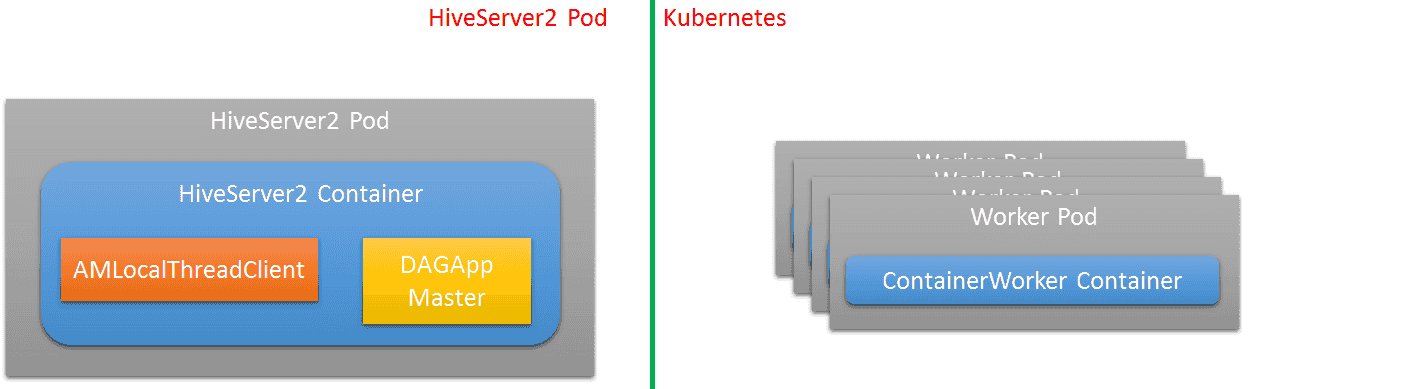

Here are schematic descriptions of the six combinations on Kubernetes where we assume Hive on MR3. In the current implementation, HiveServer2 always starts in a Pod.

-

DAGAppMaster in LocalThread mode, ContainerWorker in Local mode

-

DAGAppMaster in LocalThread mode, ContainerWorker in Kubernetes mode

-

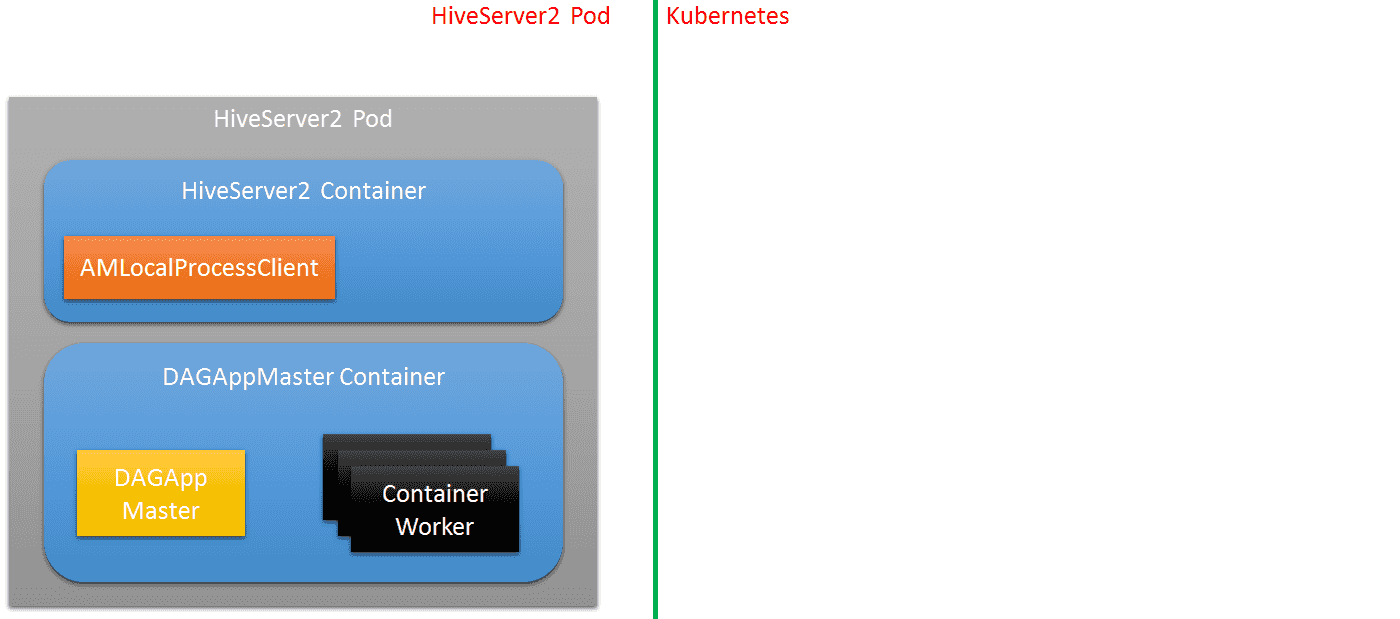

DAGAppMaster in LocalProcess mode, ContainerWorker in Local mode

-

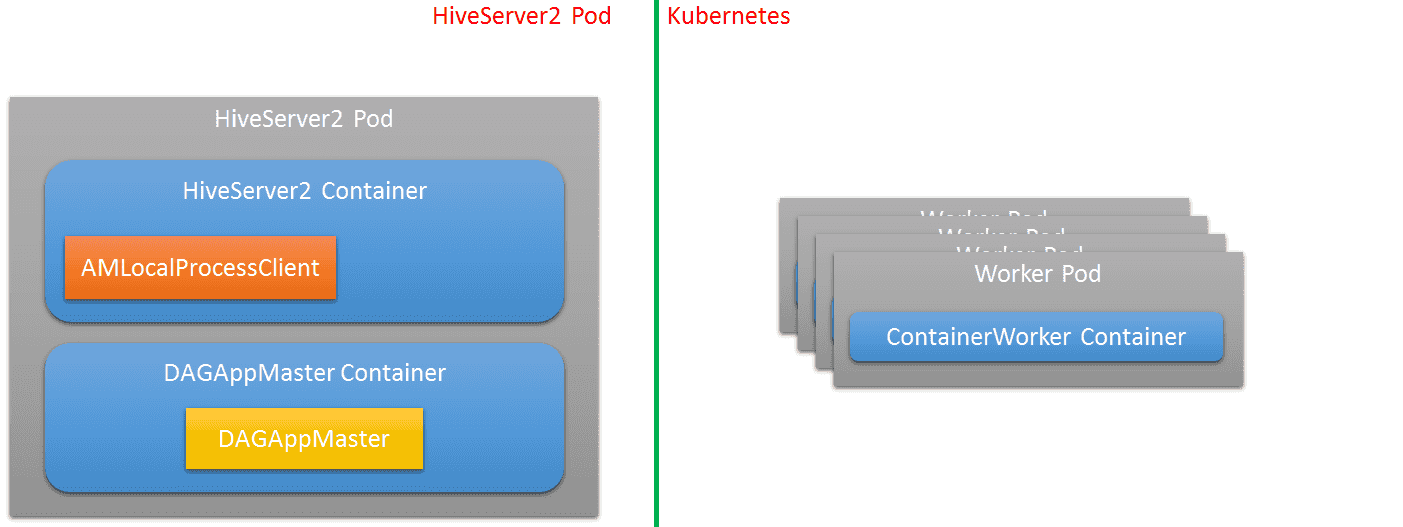

DAGAppMaster in LocalProcess mode, ContainerWorker in Kubernetes mode

-

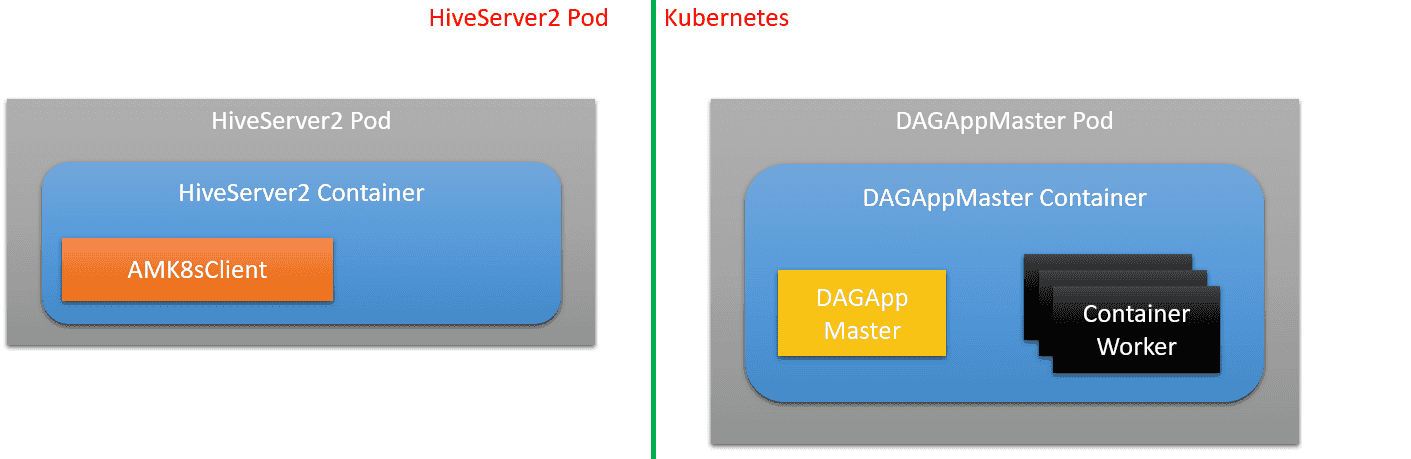

DAGAppMaster in Kubernetes mode, ContainerWorker in Local mode

-

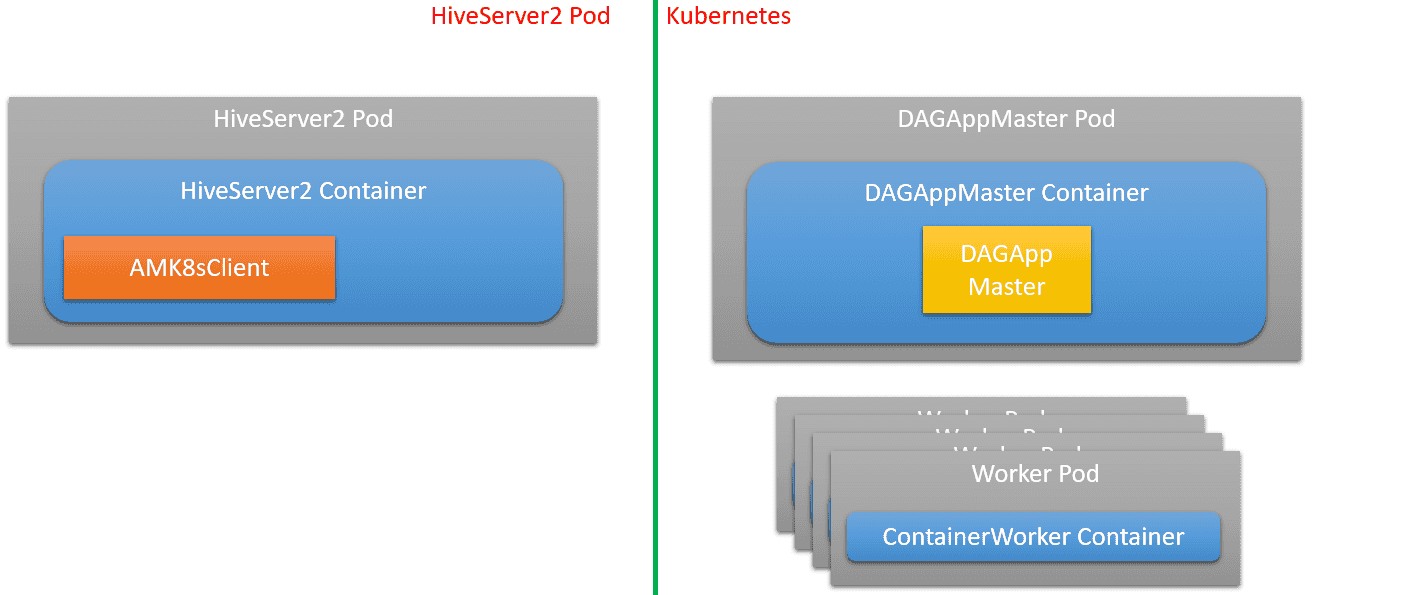

DAGAppMaster in Kubernetes mode, ContainerWorker in Kubernetes mode

Specifying DAGAppMaster/ContainerWorker mode

A DAGAppMaster mode can be specified with key mr3.master.mode in mr3-site.xml:

- LocalThread mode: mr3.master.mode=local-thread

- LocalProcess mode: mr3.master.mode=local-process

- Yarn mode: mr3.master.mode=yarn

- Kubernetes mode: mr3.master.mode=kubernetes
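As a rough illustration, the setting might appear in mr3-site.xml as follows. This is a minimal sketch that assumes mr3-site.xml uses the Hadoop-style layout of property elements inside a configuration element; the value yarn is only one of the four values listed above.

```xml
<configuration>
  <!-- Run the DAGAppMaster as a container in the Hadoop cluster.
       Other values from the list above: local-thread, local-process, kubernetes. -->
  <property>
    <name>mr3.master.mode</name>
    <value>yarn</value>
  </property>
</configuration>
```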

A ContainerWorker mode can be specified with key mr3.am.worker.mode in mr3-site.xml:

- Local mode: mr3.am.worker.mode=local. In this case, all ContainerWorkers run in Local mode.

- Yarn mode: mr3.am.worker.mode=yarn. In this case, a ContainerWorker can run in either Local or Yarn mode (which is determined by its ContainerGroup).

- Kubernetes mode: mr3.am.worker.mode=kubernetes. In this case, a ContainerWorker can run in either Local or Kubernetes mode (which is determined by its ContainerGroup).

- Process mode: mr3.am.worker.mode=process. In this case, a ContainerWorker can run in either Local or Process mode (which is determined by its ContainerGroup).
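The two keys can be combined to select one of the permitted combinations. The fragment below is a sketch of a DAGAppMaster in Yarn mode whose ContainerWorkers all run in Local mode (the Uber-like setup on Hadoop). It assumes the usual Hadoop-style property layout for mr3-site.xml and omits all other settings.

```xml
<configuration>
  <!-- DAGAppMaster starts as a container in the Hadoop cluster. -->
  <property>
    <name>mr3.master.mode</name>
    <value>yarn</value>
  </property>
  <!-- All ContainerWorkers run in Local mode, as threads inside the DAGAppMaster. -->
  <property>
    <name>mr3.am.worker.mode</name>
    <value>local</value>
  </property>
</configuration>
```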

No LocalThread/LocalProcess mode in a secure Hadoop cluster

A noteworthy limitation is that a DAGAppMaster in LocalThread or LocalProcess mode cannot start in a Kerberos-enabled secure Hadoop cluster. This is not so much a limitation of MR3 as a missing feature in Yarn (YARN-2892), which is necessary for an unmanaged ApplicationMaster to retrieve an AMRMToken. Hence the user should not start a DAGAppMaster in LocalThread or LocalProcess mode in a secure Hadoop cluster. (In Hive on MR3, do not use the --amprocess option in a secure cluster.)

If a DAGAppMaster must nevertheless start in LocalThread or LocalProcess mode in a secure Hadoop cluster, its ContainerWorkers should run only in Local mode.
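For the fallback case described above, a configuration along the following lines keeps every ContainerWorker in Local mode. This is only a sketch: it assumes the Hadoop-style property layout for mr3-site.xml, uses the key values listed in the previous section, and leaves out any security-related settings.

```xml
<configuration>
  <!-- DAGAppMaster runs as a separate process on the client machine. -->
  <property>
    <name>mr3.master.mode</name>
    <value>local-process</value>
  </property>
  <!-- With this value, all ContainerWorkers run in Local mode. -->
  <property>
    <name>mr3.am.worker.mode</name>
    <value>local</value>
  </property>
</configuration>
```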

Watch /tmp when using LocalThread or LocalProcess mode

An MR3Client creates staging directories under /tmp/<user name>/ on HDFS by default. If, however, a DAGAppMaster is to run in LocalThread or LocalProcess mode while ContainerWorkers are to run in Local mode, it creates staging directories under /tmp/<user name>/ on the local file system. If /tmp/ happens to be full at the time of creating staging directories, Yarn erroneously concludes that HDFS is full, and then transitions to safe mode in which all actions are blocked. Hence the user should watch /tmp/ on the local file system when using LocalThread or LocalProcess mode for DAGAppMasters.

A DAGAppMaster running in LocalThread or LocalProcess mode creates its working directory and logging directory under /tmp/<user name>/ on the local file system. The user can specify with key mr3.am.delete.local.working-dir in mr3-site.xml whether or not to delete the working directory when the DAGAppMaster terminates. The logging directory, however, is not automatically deleted when the DAGAppMaster terminates. Hence the user is responsible for cleaning these directories in order to prevent Yarn from transitioning to safe mode.
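A sketch of the corresponding entry in mr3-site.xml is shown below. It assumes that the key takes a boolean value and that true requests deletion of the working directory; both are assumptions to check against the MR3 configuration reference. The logging directory still has to be cleaned up separately.

```xml
<configuration>
  <!-- Assumption: a boolean key, where true deletes the local working directory
       when the DAGAppMaster terminates. -->
  <property>
    <name>mr3.am.delete.local.working-dir</name>
    <value>true</value>
  </property>
</configuration>
```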